Medical Speech Recognition: Transforming Healthcare Today

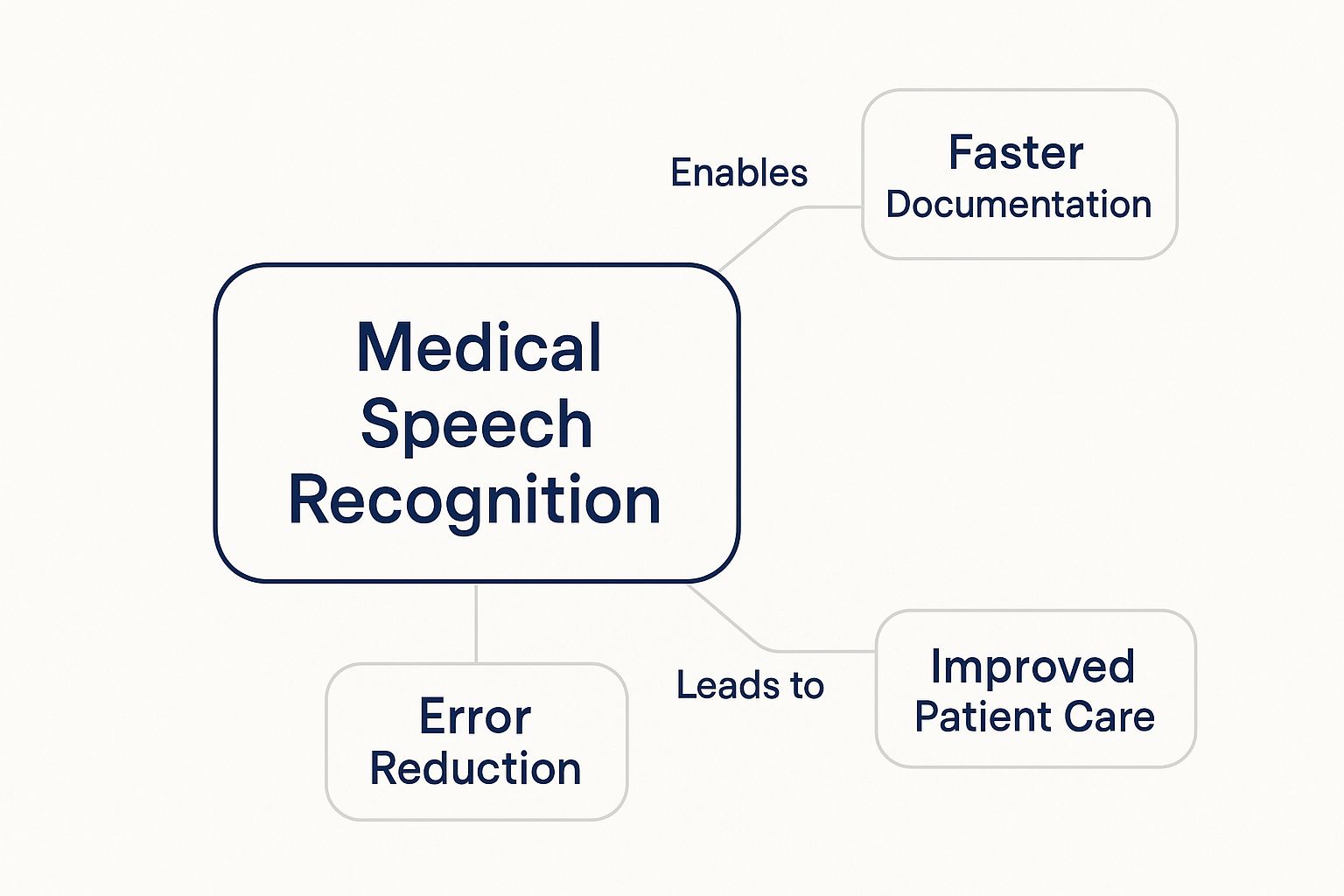

At its core, medical speech recognition is a specialized AI that translates spoken clinical language into written text. Think of it as a highly intelligent scribe that instantly captures conversations, notes, and diagnoses, cutting down the huge amount of time clinicians spend typing. It understands the complex language of medicine, creating accurate electronic health records (EHRs) and other crucial documents on the fly.

The End of Clinical Documentation Overload

For so many doctors, nurses, and other healthcare professionals, the workday doesn't stop when they see their last patient. It often drags on for hours, bogged down by a mountain of clinical documentation. This isn't just a minor annoyance; it's a major contributor to burnout and pulls them away from what truly matters—caring for patients.

Picture this: a doctor spends an average of 16 minutes per patient encounter just updating their electronic health records. That time adds up fast. This administrative weight is a massive drain on the entire healthcare system.

This is exactly the problem that medical speech recognition was built to fix. It gives clinicians a direct way to get those hours back, turning the chore of typing into a simple, natural conversation with a computer.

More Than Just Voice-to-Text

It's really important to distinguish this technology from the voice assistant on your smartphone. Consumer tools just can't keep up with the unique language of medicine. Medical speech recognition, on the other hand, is built from the ground up with deep clinical knowledge.

These systems are powered by massive medical vocabularies and AI models specifically trained to grasp:

- Complex Terminology: Everything from drug names and anatomical parts to specific procedural codes.

- Contextual Nuances: It knows the difference between "aural" and "oral" based on the rest of the conversation.

- Varied Accents and Pacing: The technology adapts to how individual clinicians actually speak.

Because of this specialized training, today's leading systems can hit accuracy rates of 99% or higher. That’s as good as, or even better than, human transcriptionists, but with the results delivered in an instant.

This technology represents a fundamental shift from simple dictation to intelligent documentation. It’s not just about converting words to text; it’s about understanding clinical intent and streamlining the entire workflow.

A Market Driven by Necessity

The surge in adopting this technology isn't just a passing trend. It’s a direct response to a very real need. As healthcare continues its digital shift, the demand for smarter documentation tools has exploded.

The numbers tell the story. One market estimate values the sector at USD 1.73 billion and expects it to climb to USD 5.58 billion by 2035. This growth is being driven by the efficiency gains of AI-powered documentation and the rise of telemedicine. You can explore more about the market's growth and see what it means for the future of healthcare.

In this guide, we’ll take you through everything you need to know about medical speech recognition. We'll break down how the AI works, show its impact on patient care and daily workflows, and offer a clear roadmap for anyone ready to break free from the paperwork grind.

How AI Translates Speech into Clinical Notes

It might seem like a bit of magic, the way medical speech recognition turns a doctor's spoken words into a perfectly formatted clinical note. But it’s not magic—it's a methodical process, driven by some seriously smart AI. The best way to think about it is as a highly trained digital scribe who can listen, comprehend, and type at superhuman speeds. The technology isn't just hearing sounds; it's understanding the dense, nuanced language of medicine.

The entire process boils down to three core components that work in harmony. Each piece has a specific job, and when they come together, they ensure that what a clinician says is precisely what gets logged in the patient's chart. Let's pull back the curtain and see how it works.

Acoustic Modeling: The AI’s Ears

The first step is all about listening. This is where Acoustic Modeling comes in, acting as the system’s ears. Its one and only job is to take the raw audio of a clinician's voice—just a stream of sound waves—and chop it up into the smallest units of sound in a language, known as phonemes.

For instance, when a doctor says the word "fracture," the acoustic model doesn't process the entire word at once. Instead, it identifies the individual sounds: 'f,' 'r,' 'a,' 'k,' 'ch,' 'er.' These models are trained on thousands of hours of speech from many different speakers, so they become very good at picking out these sounds, even if the speaker has a thick accent, talks quickly, or is dictating over background noise. This is the foundational step that turns messy audio into the clean building blocks of language.
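To make the idea of phonemes concrete, here is a minimal Python sketch of the step that follows the acoustic model: matching a phoneme sequence against known pronunciations. The table, the ARPAbet-style symbols, and the function name `words_for_phonemes` are hand-written assumptions for illustration, not the internals of any real product.

```python
# Toy pronunciation table: word -> phoneme sequence.
# The ARPAbet-style symbols are hand-written for illustration only.
PRONUNCIATIONS = {
    "fracture": ("F", "R", "AE", "K", "CH", "ER"),
    "oral": ("AO", "R", "AH", "L"),
    "aural": ("AO", "R", "AH", "L"),
}


def words_for_phonemes(phonemes):
    """Return every known word whose pronunciation matches the phoneme sequence."""
    target = tuple(phonemes)
    return sorted(word for word, seq in PRONUNCIATIONS.items() if seq == target)


print(words_for_phonemes(["F", "R", "AE", "K", "CH", "ER"]))  # ['fracture']
print(words_for_phonemes(["AO", "R", "AH", "L"]))  # ['aural', 'oral']
```

The second call returns two words because they sound the same in this toy table. Sound alone cannot separate them, which is why a later stage has to look at context.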

Language Modeling: The AI's Brain

With the sounds identified, the baton is passed to Language Modeling. This is the predictive brain of the operation. It takes that sequence of phonemes and starts calculating the most likely words and phrases they form. Crucially, it does this with a deep-seated understanding of medical context.

This is where the real intelligence of the system shows up. Let's say the acoustic model picks up sounds that could be either "oral" or "aural." A standard dictation tool might get it wrong. But the medical language model looks at the surrounding words for clues. If the dictation is about an ear infection, it knows to select "aural." If it's about medication, it confidently picks "oral."

This predictive power is what truly sets medical speech recognition apart. The AI isn't making a random guess; it's using a sophisticated, probability-based map of clinical language to land on the most likely term for the context.
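The "oral" versus "aural" choice can be sketched as a small probability calculation. The example below is a simplified stand-in, assuming made-up co-occurrence counts and a naive smoothed scoring rule; production language models are far more elaborate, and every name here (`CONTEXT_COUNTS`, `context_score`, `pick_word`) is hypothetical.

```python
import math
from collections import Counter

# Hypothetical counts of how often each candidate appears near a context word
# in past dictations. Real systems learn far richer statistics.
CONTEXT_COUNTS = {
    "oral": Counter({"medication": 40, "tablet": 30, "dose": 25, "ear": 1}),
    "aural": Counter({"ear": 35, "hearing": 30, "infection": 20, "medication": 2}),
}
VOCABULARY = {word for counts in CONTEXT_COUNTS.values() for word in counts}


def context_score(candidate, context_words, smoothing=1.0):
    """Sum of smoothed log-probabilities of the context words given the candidate."""
    counts = CONTEXT_COUNTS[candidate]
    denominator = sum(counts.values()) + smoothing * len(VOCABULARY)
    return sum(
        math.log((counts[word] + smoothing) / denominator) for word in context_words
    )


def pick_word(candidates, context_words):
    """Return the candidate whose context score is highest."""
    return max(candidates, key=lambda c: context_score(c, context_words))


print(pick_word(["oral", "aural"], ["ear", "infection"]))    # aural
print(pick_word(["oral", "aural"], ["medication", "dose"]))  # oral
```

The smoothing term keeps an unseen context word from zeroing out a candidate, so the result is a ranking by likelihood rather than a guarantee.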

This ability to understand context is what helps providers create better, faster documentation, which in turn supports more efficient workflows and better patient care.

The Medical Lexicon: The AI’s Dictionary

The final, indispensable piece is the Medical Lexicon. Think of it as a massive, hyper-specialized dictionary. While the language model is busy predicting word sequences, the lexicon is what provides the actual vocabulary. And we're not talking about your standard dictionary; this is a vast database packed with hundreds of thousands of medical terms.

This lexicon is incredibly comprehensive and includes:

- Pharmaceutical Names: Everything from common over-the-counter drugs to complex biologics.

- Anatomical Terms: Precise terminology for every single part of the human body.

- Procedural Codes: Specific language required for accurate records and billing.

- Common Acronyms: It knows that "MI" in a cardiology note means myocardial infarction, not mitral insufficiency.

This built-in dictionary ensures that when the AI thinks it hears "myocardial infarction," it has the correct term ready to go. It prevents the system from making embarrassing mistakes by substituting a similar-sounding, non-medical word—a common pitfall for general-purpose voice assistants.
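One way to picture the acronym handling described above is a lookup keyed by specialty. This is a minimal sketch with a tiny hand-picked table; the `ACRONYMS` data and the `expand_acronym` function are illustrative assumptions, and a real lexicon would be vastly larger.

```python
# Tiny illustrative acronym table: acronym -> {specialty: expansion}.
ACRONYMS = {
    "MI": {"cardiology": "myocardial infarction"},
    "RA": {"rheumatology": "rheumatoid arthritis", "cardiology": "right atrium"},
}


def expand_acronym(token, specialty):
    """Expand an acronym for the given specialty; return the token unchanged if unknown."""
    return ACRONYMS.get(token.upper(), {}).get(specialty, token)


print(expand_acronym("MI", "cardiology"))    # myocardial infarction
print(expand_acronym("RA", "rheumatology"))  # rheumatoid arthritis
print(expand_acronym("XYZ", "cardiology"))   # XYZ
```

Returning the token unchanged when nothing matches is a deliberately cautious choice here: it leaves the original text in place rather than guessing an expansion.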

You can learn more about how specialized tools stack up in our complete guide to medical speech recognition software. By weaving these three elements together, the AI translates speech to text with an accuracy that can hit 99%, putting it on par with the best human transcriptionists.

Improving Patient Care and Clinical Workflows

It’s one thing to understand the mechanics of medical speech recognition, but it’s another to see how it genuinely changes the game in a hospital or clinic. This isn't just another efficiency tool. It fundamentally rebalances a clinician's day, pulling focus away from the keyboard and putting it back where it belongs: on the patient.

By automating one of the most draining parts of the job, speech recognition creates a positive ripple effect. It touches everything from faster decision-making to a better patient experience. The biggest win? It gives clinicians back their time.

Reclaiming Time and Reducing Clinician Burnout

The administrative burden on healthcare professionals is crushing. While electronic health records (EHRs) have been great for centralizing data, they've also chained clinicians to their computers. For a sense of scale, the U.S. hospital EHR adoption rate has hit 97.4%, and with it, the pressure to document everything has skyrocketed.

This is a direct contributor to burnout. In fact, over 33% of healthcare systems are now piloting ambient documentation tools powered by voice AI just to give their staff some breathing room.

Simply put, doctors can talk much faster than they can type. By swapping the keyboard for a microphone, they can capture detailed patient encounters in real time, turning hours of typing into mere minutes of speaking.

This speed delivers some major advantages:

- Less "Pajama Time": It drastically cuts down on the after-hours work clinicians do at home just to catch up on charts.

- More Patient Time: With documentation handled more quickly, doctors can see more patients without feeling rushed or overwhelmed.

- Better Engagement: Clinicians can finally maintain eye contact and truly listen to their patients instead of staring at a screen.

This isn't just about shaving a few minutes off the clock. It's a powerful tool in the fight against the burnout that’s so rampant in medicine.

Enhancing the Quality of Clinical Documentation

Beyond just speed, medical speech recognition boosts the quality of clinical notes in a big way. When a clinician is typing under pressure, they naturally fall back on shorthand, abbreviations, and choppy phrases. This can make the notes confusing for anyone else on the care team, creating risks for patient safety.

Dictation, on the other hand, encourages a more natural, narrative style. When a doctor can just speak about the patient visit, they capture more context, nuance, and critical details.

A more detailed clinical note leads to better clinical decisions. When the patient's story is captured in its entirety, the entire care team has a clearer picture, reducing the risk of medical errors and improving diagnostic accuracy.

This richer detail also pays dividends for billing and compliance. Thorough documentation provides solid support for medical coding, resulting in more accurate billing and fewer rejected claims. It also creates a stronger medical record that holds up during audits. For a deeper dive into this, check out our complete guide on documentation workflow management.

To put these benefits into perspective, let's look at the numbers. The following table breaks down the tangible improvements seen when shifting from manual typing to a voice-powered documentation system.

Impact of Medical Speech Recognition on Healthcare Metrics

| Area of Impact | Traditional Method (Manual Typing) | With Medical Speech Recognition | Potential Improvement |
|---|---|---|---|
| Documentation Time | 2-4 hours per day | 30-60 minutes per day | 75% reduction |
| Note Completeness | Often relies on templates and shorthand | Captures full narrative and nuance | 40% increase in detail |
| Billing Accuracy | Prone to coding errors from incomplete notes | Supports more accurate CPT/ICD codes | 15-20% reduction in claim denials |
| Clinician Burnout | High levels of "pajama time" and fatigue | Less after-hours work, more patient focus | Significant decrease in burnout scores |

As the data shows, the impact is substantial across the board, improving both operational efficiency and the well-being of the clinical staff.
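The documentation-time row can be checked with simple arithmetic. The sketch below assumes the ranges pair up end to end (2 hours with 30 minutes, 4 hours with 60 minutes), which is an interpretation of the table rather than something it states; `percent_reduction` is a helper name made up for this example.

```python
def percent_reduction(before_minutes, after_minutes):
    """Percentage of time saved when going from before_minutes to after_minutes."""
    return 100.0 * (before_minutes - after_minutes) / before_minutes


# Pairing the low ends and the high ends of the table's ranges.
print(percent_reduction(120, 30))  # 75.0
print(percent_reduction(240, 60))  # 75.0

# Other pairings within the same ranges give different results.
print(percent_reduction(120, 60))  # 50.0
print(percent_reduction(240, 30))  # 87.5
```

Under that pairing the 75% figure holds at both ends of the range. Individual results within the quoted ranges could fall anywhere from 50% to 87.5%.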

Creating a More Fluid and Mobile Workflow

Modern healthcare is rarely a desk job. Clinicians are constantly moving between exam rooms, hospital wings, and even different buildings. Medical speech recognition is built for this reality, untethering documentation from the workstation.

Many of today's best platforms offer powerful mobile apps, allowing clinicians to finish their notes from anywhere.

Think about a doctor who just finished a patient visit. Instead of having to find a computer to type up the note, they can simply dictate it into their phone while walking to the next room. This turns those in-between moments into productive time, ensuring notes are completed while the details are still fresh in their mind. This kind of flexibility is essential for creating an efficient, patient-first workflow in any healthcare environment.

Seeing Speech Recognition in Action

It’s one thing to talk about the technology, but it’s another to see how medical speech recognition actually works on the ground, in the real world of clinical care. This isn't some far-off future concept; it's already changing how healthcare professionals get their work done, from the chaos of a hospital ward to the focused quiet of an imaging lab.

These tools are much more than just digital dictaphones. They’ve become intelligent partners that can capture the full, nuanced story of patient care with remarkable speed and accuracy. Let’s look at a few places where this technology is already making a huge impact.

Transforming Radiology Reporting

If there’s one field where speed and precision are non-negotiable, it’s radiology. Radiologists spend their days interpreting incredibly detailed scans—X-rays, CTs, MRIs—and documenting every single finding. Any delay in getting that report out can directly affect a patient's treatment.

Picture a radiologist looking at a complex chest CT. Instead of constantly stopping to type or click through clunky software templates, they can just speak what they see, as they see it.

- Real-Time Dictation: They might say, "Findings consistent with bilateral pneumothorax, more significant on the right side. No evidence of tension." The system understands and transcribes this specialized language on the spot.

- Structured Data Entry: With voice commands like "insert finding" or "next section," the software smartly places the information into the right fields of the report.

- Immediate Distribution: As soon as they finish speaking, the report can be finalized and sent straight to the patient’s EHR and the referring physician. This cuts the turnaround time from hours down to just minutes.

This isn't just about being faster. It allows the radiologist to keep their eyes and their focus locked on the images, which ultimately leads to a more careful and accurate diagnosis.
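The structured data entry idea above, where a spoken command moves the cursor to the next part of the report, can be sketched in a few lines. The section names, the exact command phrase handling, and the `build_report` function are assumptions for illustration; actual radiology software will have its own templates and command sets.

```python
# Hypothetical report template; real templates vary by site and modality.
SECTIONS = ["Findings", "Impression", "Recommendations"]


def build_report(utterances):
    """Route dictated text into report sections, advancing on 'next section'."""
    parts = {name: [] for name in SECTIONS}
    index = 0
    for utterance in utterances:
        text = utterance.strip()
        if text.lower() == "next section":
            # Stay on the last section if the command is repeated too often.
            index = min(index + 1, len(SECTIONS) - 1)
        elif text:
            parts[SECTIONS[index]].append(text)
    return {name: " ".join(chunks) for name, chunks in parts.items()}


report = build_report([
    "Findings consistent with bilateral pneumothorax, more significant on the right side.",
    "next section",
    "No evidence of tension.",
])
print(report["Findings"])
print(report["Impression"])
```

Treating the command as a control signal rather than as text is what keeps "next section" out of the finished report.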

Streamlining Primary Care Encounters

In a busy primary care office, time is the most valuable resource. A doctor might see 20 or 30 patients in a single day, each with their own story. Documenting every visit is essential, but it’s also a massive time sink that often pits the doctor’s attention against the patient’s.

Medical speech recognition helps solve this dilemma. Think about a family doctor seeing a patient for a routine follow-up on their chronic hypertension. While talking with the patient, they can quietly capture the important information with their voice.

The doctor can keep making eye contact and have a normal conversation while dictating something like, "Patient reports good adherence to lisinopril. Blood pressure is 130 over 85. Plan to continue current medication and recheck in three months." All of this goes directly into the patient's note in the EHR.

This fundamentally changes the visit. The patient feels like they have their doctor's full attention, and the doctor gets to avoid "pajama time"—those extra hours spent catching up on charting at home. It also makes other tasks, like writing referral letters or sending prescriptions, much easier because the core information is already captured. For clinics looking to integrate these tools, good electronic health records training is the perfect starting point.

The Next Frontier: Ambient Clinical Intelligence

The most exciting development in this space is what’s known as ambient clinical intelligence. This takes speech recognition to a whole new level, shifting from a doctor actively dictating to a system that passively listens.

Here’s how it works: an AI-powered device in the exam room securely and unobtrusively captures the natural conversation between the doctor and patient.

The system is smart enough to pull out all the clinically important details—symptoms, history, exam findings, and the final treatment plan. After the appointment, it presents the doctor with a completely drafted, well-organized clinical note. All the doctor has to do is review it, make any necessary tweaks, and sign off.

This nearly wipes out manual data entry. The clinician’s job changes from being a scribe to being an editor, freeing them up to focus 100% of their energy on caring for the patient.

How to Choose and Implement the Right Solution

Bringing a medical speech recognition system into your practice is a big move, one that promises to give clinicians back their time and dramatically improve documentation. But like any powerful tool, its success depends entirely on choosing the right one and rolling it out with a clear plan. Think of it like prescribing a new medication: you have to ensure it’s the right fit for the patient’s specific condition and then provide a clear regimen for taking it.

The market for this tech is booming. By another estimate, it is worth roughly USD 1.68 billion and is expected to reach around USD 5.32 billion by 2035, a compound annual growth rate of about 11.05%. This isn't just a fleeting trend; it’s a clear sign that healthcare is hungry for smarter, more efficient tools. You can get a closer look at these numbers and learn more about its projected growth.
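For readers who want to sanity-check growth figures like these, the compound growth arithmetic is short. The sketch below assumes an 11-year horizon ending in 2035, since the text does not state the base year; the function names `project` and `cagr` are illustrative.

```python
def project(start_value, annual_rate, years):
    """Value after compounding start_value at annual_rate for the given years."""
    return start_value * (1.0 + annual_rate) ** years


def cagr(start_value, end_value, years):
    """Compound annual growth rate implied by a start value, end value, and horizon."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


YEARS = 11  # assumption: the base year is not given in the text

print(round(project(1.68, 0.1105, YEARS), 2))   # 5.32
print(round(cagr(1.68, 5.32, YEARS) * 100, 2))  # 11.05
```

Under that assumed horizon, the start value, end value, and growth rate quoted above are consistent with one another.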

With so many options popping up, a structured evaluation is more critical than ever.

Key Criteria for Selecting Your System

Not all speech recognition platforms are built for the unique demands of healthcare. Before you sign on the dotted line, you need to zero in on three non-negotiable pillars: accuracy, integration, and security. Nailing these three will lay the groundwork for a system your team will actually want to use.

Here’s what your checklist should look like:

- Exceptional Medical Accuracy: The system needs a deep, specialty-specific vocabulary baked right in. Don't just take their word for it—test it yourself. Use your most complex terms, have providers with different accents try it out, and see how it performs in a noisy clinic. You're aiming for 99% accuracy or better from day one.

- Seamless EHR Integration: The software must play nicely with your existing Electronic Health Record (EHR) system. The goal is to let clinicians dictate directly into any field within the EHR, without clunky workarounds that disrupt their flow.

- Ironclad Security and HIPAA Compliance: This is a deal-breaker. Confirm the vendor encrypts all data both in transit and at rest, that is, while it's being sent and while it's sitting on a server. They must also be ready and willing to sign a Business Associate Agreement (BAA), which is your legal assurance that they're serious about protecting patient health information (PHI).
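The accuracy test suggested in the first checklist item can be made measurable with word error rate (WER), a standard metric that counts word-level substitutions, insertions, and deletions against a reference transcript. The following is a minimal sketch; the sample sentences are invented, and a real evaluation would also normalize punctuation and numbers before comparing.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance between the two texts, divided by reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # prev[j] holds the edit distance between the ref words seen so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1] / len(ref)


reference = "patient reports good adherence to lisinopril"
hypothesis = "patient reports good adherence to listen april"
wer = word_error_rate(reference, hypothesis)
print(f"WER: {wer:.2%}, accuracy: {1 - wer:.2%}")
```

In this invented example a single misheard drug name produces two word errors out of six, so short test scripts swing widely. A 99% accuracy target works out to roughly one word error per hundred reference words, which is why a longer, representative set of dictations gives a fairer picture.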

As you weigh your options for medical dictation, it’s smart to look at a range of speech recognition tools, including systems that present an affordable alternative to Dragon NaturallySpeaking.

A Phased Implementation Plan for Success

Once you’ve picked your vendor, the real work begins. A successful launch is about much more than just installing software; it requires a thoughtful, phased approach that gets your clinical staff on board. If you rush it, you’ll face resistance. But if you manage it well, you'll build champions.

Follow these steps for a much smoother rollout:

- Conduct a Needs Assessment: First things first, figure out where the documentation pain is most acute in your practice. Is it the ER, a specific specialty, or a particular role? Knowing the real-world challenges helps you set clear goals for what you want the new system to achieve.

- Launch a Pilot Program: Find a small group of tech-savvy, enthusiastic clinicians to be your guinea pigs. Let them test the software in their day-to-day work. Their feedback will be gold for spotting workflow kinks, and the pilot users themselves often become advocates who can vouch for the technology with their peers.

- Provide Comprehensive Staff Training: Even though modern systems like Whisperit are incredibly intuitive, proper training is still essential. Don’t just show them the features; show them how the tool solves their specific problems and saves them time on the tasks they do a dozen times a day.

- Monitor and Gather Feedback: After going live, keep a close eye on usage and actively ask for feedback. Use what you learn to offer more support, tweak workflows, and—most importantly—share clear metrics that show the positive impact, like how much documentation time has been saved.

A successful implementation is not just a technical project; it's a change management initiative. Securing clinician buy-in from the very beginning is the single most important factor in realizing the full benefits of medical speech recognition.

By carefully selecting a solution that fits your clinical reality and following a clear implementation plan, you can finally turn documentation from a daily chore into a seamless, efficient part of patient care.

Navigating Security and HIPAA Compliance

In healthcare, nothing is more sacred than the trust between a patient and their provider. Protecting sensitive health information is the legal and ethical bedrock of that trust. So, when you bring any new technology into the mix—especially one that listens to and processes patient conversations—security can't be an afterthought. It has to be the foundation.

For any medical speech recognition tool, compliance with the Health Insurance Portability and Accountability Act (HIPAA) is absolutely non-negotiable.

This means that from the second a doctor speaks into a microphone to the moment that data is stored, every bit of it must be locked down. Think of it like a digital armored car for patient data; the entire journey has to be protected, without a single weak link.

The Pillars of a Secure System

A truly HIPAA-compliant speech recognition platform is built with layers of defense. These safeguards work in concert to create a fortress around Protected Health Information (PHI), keeping it confidential and untampered with. For any healthcare leader, understanding these data security compliance strategies is a must before signing off on new tech.

Here’s what to look for:

- End-to-End Encryption: This is the gold standard. Voice data should be encrypted so it’s unreadable to anyone who shouldn't have access, both in transit over the internet and at rest on a server.

- Secure Data Centers: The physical location where data is stored matters. We're talking about facilities with Fort Knox-level security, tight access controls, and 24/7 monitoring to stop breaches before they start.

- Strict User Access Controls: Not everyone in a hospital needs to see every patient's chart. A good system lets you set up specific user roles and permissions, ensuring clinicians only access the information they need to do their jobs.
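The user access controls in the last item are often implemented as role-based permissions. Here is a minimal sketch of that idea; the role names, action names, and the `is_allowed` helper are hypothetical and not taken from any particular product or from HIPAA itself.

```python
# Hypothetical role-to-permission mapping for a documentation system.
ROLE_PERMISSIONS = {
    "physician": {"read_note", "write_note", "sign_note"},
    "nurse": {"read_note", "write_note"},
    "billing": {"read_codes"},
}


def is_allowed(role, action):
    """Return True only if the role is known and explicitly grants the action."""
    return action in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("physician", "sign_note"))  # True
print(is_allowed("nurse", "sign_note"))      # False
print(is_allowed("visitor", "read_note"))    # False
```

The check denies by default: an unknown role or an unlisted action gets no access, which matches the principle of granting only what each job requires.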

The Importance of a Business Associate Agreement

Technical safeguards are only half the battle. You also need a critical legal document: the Business Associate Agreement (BAA).

This is a formal contract between your healthcare organization and a vendor (like a speech recognition provider) that will handle your PHI. A BAA legally binds the vendor to the same tough HIPAA security standards you’re held to.

A BAA is your assurance that your technology partner is just as committed to protecting patient privacy as you are. Never, ever entrust a vendor with patient data without a signed BAA in place.

At the end of the day, modern medical speech recognition platforms aren't just built for accuracy—they're engineered for security from the ground up. By partnering with vendors who live and breathe these principles, healthcare organizations can bring in voice technology as a safe, trustworthy, and incredibly powerful tool.

To dig deeper into this crucial topic, check out our guide on finding HIPAA compliant speech-to-text solutions.

Common Questions About Medical Speech Recognition

Let’s be honest—adopting any new technology in a clinical setting brings up a lot of practical questions. When it's something as critical as your documentation workflow, you need clear, straightforward answers.

Here’s a breakdown of the most common questions we hear from clinicians about medical speech recognition, designed to clear up the confusion around accuracy, EHR integration, and the learning curve.

How Accurate Is It, Really?

This is usually the first question on everyone's mind: can an AI really keep up with the nuances of medical terminology? The short answer is yes. Today’s top-tier systems consistently hit 99% accuracy rates, which is right on par with what you’d expect from an expert human transcriptionist.

But the real advantage isn't just accuracy; it's speed and adaptability. The transcription happens in real time, completely eliminating the delays you get with traditional transcription services. Even better, the AI learns from your corrections, so it gets progressively better at understanding your specific accent, speaking style, and the unique jargon of your specialty.

The days of worrying about speech recognition accuracy are largely behind us. Modern systems pair massive medical vocabularies with sophisticated AI models, so what you say is, in the vast majority of cases, exactly what gets documented.
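As a rough illustration of learning from corrections, the sketch below remembers phrases a user has fixed and applies those fixes to later transcripts. This is a deliberately simple stand-in: real systems typically adapt their underlying models rather than do text replacement, and the `CorrectionMemory` class is a made-up name.

```python
import re


class CorrectionMemory:
    """Remembers a user's past corrections and reapplies them to new text."""

    def __init__(self):
        self._fixes = {}

    def learn(self, heard, corrected):
        """Record that the phrase 'heard' should have been 'corrected'."""
        self._fixes[heard.lower()] = corrected

    def apply(self, text):
        """Replace every remembered misrecognition, longest phrases first."""
        for heard in sorted(self._fixes, key=len, reverse=True):
            pattern = r"\b" + re.escape(heard) + r"\b"
            fix = self._fixes[heard]
            text = re.sub(pattern, lambda match, fix=fix: fix, text,
                          flags=re.IGNORECASE)
        return text


memory = CorrectionMemory()
memory.learn("listen april", "lisinopril")
print(memory.apply("Continue Listen April 10 mg daily."))
# Continue lisinopril 10 mg daily.
```

Once a correction is stored, the same slip is fixed automatically the next time it appears, which is the user-visible effect the adaptive behavior aims for.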

Will This Integrate with My EHR?

Nobody wants a tool that creates more work. That's why seamless integration is non-negotiable. Leading medical speech recognition platforms are built to play nicely with all major EHRs, including Epic, Cerner, and eClinicalWorks, so your workflow stays smooth.

So how does it actually work? There are a couple of common ways:

- Direct Dictation: The simplest method allows you to dictate directly into any text field in your EHR. If you can type in it, you can speak into it.

- API Connections: Deeper integrations use APIs to enable more advanced features, like structured data entry and voice commands that can navigate the EHR itself.

Before you commit to any solution, make sure to confirm its specific integration capabilities with your EHR system. It's a crucial step.

What Is the Training Process Like?

The thought of a steep learning curve can be a major roadblock, but modern platforms are designed to be incredibly intuitive. Most clinicians are comfortable and proficient after a single short training session.

The focus is usually on simple workflow habits and a handful of basic voice commands like "new paragraph" or "sign note." The best systems don't even require you to create a voice profile anymore—you can start dictating accurately from day one. This lets you focus on improving clinical documentation without getting bogged down by technical setup.

Ready to cut your documentation time in half and refocus on patient care? Discover how Whisperit provides unparalleled accuracy and seamless integration to transform your clinical workflow. Learn more at whisperit.ai