How to Test Security Controls: A Practical Guide

Testing your security controls isn't just a technical task; it’s a mindset. It's about systematically planning your attack, executing it with the right tools, digging into the results to find the weak spots, and then using that knowledge to build a stronger defense. You have to be proactive and validate that your security measures actually work before a real attacker proves they don't.

Why We Can't Just "Set It and Forget It" Anymore

In the early days of IT security, the approach was pretty reactive. You'd set up a firewall, install some antivirus software, and basically assume you were safe until an alarm went off. That kind of thinking today is a guaranteed way to get into trouble. The modern threat environment forces us to be proactive—to constantly question and test our own defenses.

This isn't just an IT problem; it's a fundamental business issue. An unverified control is just an assumption, and in cybersecurity, assumptions are incredibly dangerous. Think about a retail company launching a new payment portal. If they just assume it's secure, a single undiscovered flaw could lead to thousands of stolen customer records. The fallout from that kind of mistake—both financially and in terms of trust—can be devastating.

Moving Beyond the Checklist

Knowing how to properly test your security controls is about more than just ticking boxes on a compliance sheet. It forces you to answer the one question that should keep every CISO up at night: "Are we actually secure, or do we just feel secure?" Answering that question honestly is why you need a structured testing program that treats security as a continuous cycle, not a one-time project.

The market data backs this up. The global security testing market didn't just grow; it exploded from $7.47 billion in 2019 to $13.15 billion in 2023. This isn't a fluke. It's a direct reaction to the relentless wave of cyberattacks hitting everything from critical infrastructure to small businesses, pushing everyone to invest more in making sure their security frameworks are solid.

This hands-on approach delivers some serious benefits:

- Finds real-world gaps: Testing uncovers the kind of vulnerabilities that a purely theoretical assessment would completely miss.

- Validates your spending: It proves that the expensive tools and systems you've invested in are configured correctly and doing their job.

- Drives improvement: Every test cycle gives you concrete data to make your defenses stronger over time.

- Makes compliance easier: A solid testing program is a huge part of meeting regulatory demands. Using compliance management tools can help you track and report on these activities much more efficiently.

The real goal isn't just to find flaws. It's to build a resilient security culture by creating a feedback loop: test, find, fix, and then test again. Each cycle makes your organization that much harder to breach.

Understanding the Core Testing Philosophies

Before you start running tests, you need to understand the different mindsets you can adopt. Each testing philosophy mimics a different kind of attacker—from a complete outsider who knows nothing about your network to a privileged insider who knows everything. Choosing the right one sets the stage for what you're trying to achieve.

To make this clearer, let's break down the three main approaches. Each has a different starting point and a unique goal, so picking the right one is key to getting valuable results.

Core Security Testing Philosophies Explained

| Testing Philosophy | Tester's Knowledge | Objective | Best For |
|---|---|---|---|
| Black-Box Testing | None. The tester has zero internal knowledge of the system, just like a typical external attacker. | To simulate an opportunistic, external attack and find vulnerabilities that are exposed to the outside world. | Simulating external threats and finding easily exploitable, public-facing vulnerabilities. |
| White-Box Testing | Complete. The tester has full access to source code, architecture diagrams, and system documentation. | To conduct a thorough, deep-dive audit of the code and infrastructure from an insider's perspective. | Performing in-depth code reviews, identifying complex logic flaws, and assessing internal systems. |
| Grey-Box Testing | Partial. The tester has some information, like user-level login credentials, but no access to the source code. | To mimic an attack from a user with some level of privilege or an attacker who has already breached the perimeter. | Testing for privilege escalation vulnerabilities and understanding what a compromised user account could do. |

Ultimately, you'll likely use a mix of these philosophies. A comprehensive testing strategy often starts with a black-box test to find the low-hanging fruit, followed by more targeted grey-box and white-box assessments to dig deeper.

Building Your Security Control Testing Framework

A successful testing program starts with a solid plan, not a frantic scramble. Jumping into tests without a clear strategy is like trying to navigate a new city without a map—you might get lucky and stumble onto something interesting, but you’ll waste a ton of time and miss all the important landmarks. A testing framework is that map. It gives your efforts direction and purpose.

This framework is your blueprint for building a testing program that's repeatable, measurable, and genuinely effective. It’s about answering the fundamental questions: what are we testing, why does it matter, and how will we prove our defenses actually work? Without this foundation, your testing efforts will feel chaotic and inefficient, and they will be incredibly difficult to justify to leadership.

Defining Your Scope and Critical Assets

The first real step is figuring out your scope. Let's be honest, you can't test everything with the same level of intensity, so you have to prioritize. This starts by identifying your most critical assets—the data, systems, and processes that are absolutely essential to keeping the lights on.

Don't just think about servers and databases. Your critical assets are often much more than that. They could be:

- Sensitive Data: Think PII, payment card data (regulated under PCI DSS), intellectual property, or patient health records (PHI). This is the crown-jewel data.

- Essential Systems: The ERP system that runs your finances, the CRM holding all your customer data, or the production control system managing the factory floor.

- Business Processes: Your online checkout flow, the new employee onboarding workflow, or your supply chain management system.

Once you have this list, you can start mapping relevant threats to each asset. An attacker’s motive for hitting your customer database is completely different from their reason for disrupting your manufacturing operations. Understanding these threat scenarios helps you pinpoint which security controls are the most important ones to test. For example, a control designed to stop data exfiltration is a much higher priority for your PII than one that just ensures uptime.

Building a solid framework really starts with understanding the risks your organization faces. A comprehensive cybersecurity risk assessment template is a massive help here, as it helps you document threats and align your controls directly to them.

Mapping Controls to Your Assets

With your critical assets identified and threats mapped out, the next logical step is to connect them to the specific security controls designed to protect them. This isn’t just about making a list of your firewalls and antivirus software; it’s about truly understanding the function of each control in the context of risk.

A security control is simply a safeguard or countermeasure you put in place to manage or reduce risk. The goal isn't just to have controls, but to ensure they are effective at preventing, detecting, and responding to security incidents.

Let’s take your customer database, for example. It’s likely protected by several layers of controls:

- Preventive Controls: Access control lists (ACLs) that dictate who can even query the database, plus encryption that protects the data at rest.

- Detective Controls: A SIEM system that logs and alerts on suspicious query patterns or unusual activity.

- Responsive Controls: An incident response plan that spells out exactly how to react if a breach is detected.

Mapping these out creates a clear line of sight from a business-critical asset all the way down to the technical safeguards protecting it. For a much deeper dive into organizing these elements, our guide on creating a security control framework offers a great, structured approach.
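To make that line of sight concrete, here is a minimal sketch that keeps the mapping as a small in-code register and flags any asset missing a preventive, detective, or responsive control. The asset and control names are hypothetical, and a real program would more likely keep this in a GRC tool or spreadsheet; the point is that gaps become visible, and those gaps are natural first candidates for testing.

```python
from dataclasses import dataclass, field

# The three control functions described above.
FUNCTIONS = {"preventive", "detective", "responsive"}


@dataclass
class Control:
    name: str
    function: str  # must be one of FUNCTIONS

    def __post_init__(self):
        if self.function not in FUNCTIONS:
            raise ValueError(f"unknown control function: {self.function}")


@dataclass
class Asset:
    name: str
    controls: list[Control] = field(default_factory=list)


def coverage_gaps(assets: list[Asset]) -> dict[str, set[str]]:
    """Return, per asset, the control functions that have no mapped control."""
    gaps = {}
    for asset in assets:
        covered = {c.function for c in asset.controls}
        missing = FUNCTIONS - covered
        if missing:
            gaps[asset.name] = missing
    return gaps


# Hypothetical register: the customer database from the example above,
# plus an asset that so far only has a preventive control mapped.
assets = [
    Asset("customer-db", [
        Control("db-acls", "preventive"),
        Control("encryption-at-rest", "preventive"),
        Control("siem-query-alerts", "detective"),
        Control("ir-plan-data-breach", "responsive"),
    ]),
    Asset("checkout-api", [Control("waf", "preventive")]),
]

print(coverage_gaps(assets))  # checkout-api is missing detective and responsive
```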

Creating a Realistic Testing Schedule

Finally, your framework needs a realistic testing schedule. While "continuous testing" sounds great, the reality for most of us is a practical mix of automated scans, periodic assessments, and deep-dive manual tests. A good schedule balances security needs with operational realities.

Think about these factors when planning your cadence:

- Risk Level: High-risk assets, like your public-facing web applications, demand far more frequent testing than low-risk internal systems.

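- Compliance Requirements: Some standards dictate specific testing frequencies. PCI DSS, for example, requires at least annual penetration tests and quarterly vulnerability scans, while HIPAA requires periodic evaluations of your safeguards. You don't have a choice on those.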

- Rate of Change: If an application is updated weekly, its testing cadence needs to be much faster than that of a legacy system that rarely changes.
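One way to turn those three factors into a schedule is a small policy function where the strictest factor wins. This is a sketch under assumed numbers: the per-tier intervals are illustrative placeholders rather than recommendations, the compliance value is treated as a hard ceiling (for example, 365 days for an annual penetration test), and the "test once per release cycle" rule is an assumption you would tune.

```python
from typing import Optional

# Illustrative baseline intervals (days) per risk tier; placeholders, not guidance.
BASE_INTERVAL_DAYS = {"high": 30, "medium": 90, "low": 365}


def interval_days(risk: str, compliance_max_days: Optional[int],
                  releases_per_year: int) -> int:
    """Days between tests for one asset: the strictest of the three factors wins."""
    interval = BASE_INTERVAL_DAYS[risk]              # 1. risk level
    if compliance_max_days is not None:              # 2. compliance ceiling
        interval = min(interval, compliance_max_days)
    if releases_per_year > 0:                        # 3. rate of change:
        # assume at least one test per release cycle
        interval = min(interval, max(1, 365 // releases_per_year))
    return interval


# Public web app with an annual pentest mandate and weekly releases:
print(interval_days("high", 365, 52))  # 7
# Legacy internal system, no mandate, roughly one change a year:
print(interval_days("low", None, 1))   # 365
```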

Getting buy-in from stakeholders is the final, crucial piece. Frame the conversation around risk reduction and business enablement, not technical jargon. Explain that by validating security controls, you are protecting revenue, safeguarding the brand's reputation, and ensuring the business can operate without interruption. This approach transforms testing from a line-item cost into a strategic investment.

Choosing the Right Testing Methods and Tools

Once your planning framework is solid, it's time to get your hands dirty. This is the part where you move from theory to practice, deciding how you're actually going to test your security controls. It’s all about picking the right combination of methodologies and tools for the job.

Let’s be clear: not all tests are created equal. Choosing the wrong approach is a fast way to waste a lot of time and, worse, get results that give you a false sense of security. The key is to match your testing method to your specific goals. Are you casting a wide net to find known vulnerabilities across your network? Or are you simulating a surgical strike on a high-value application? The answers will point you in the right direction.

Matching the Method to the Mission

Think of different security testing methods like tools in a mechanic's toolbox. You wouldn't use a sledgehammer to tune an engine, and you shouldn't use a basic vulnerability scan to pressure-test a critical payment application. Understanding the strengths of each approach is fundamental to building a program that actually works.

Here’s a quick rundown of the main players:

- Vulnerability Scanning: This is your baseline layer of testing. Automated scanners are fantastic for quickly checking your systems against huge databases of known vulnerabilities. They provide broad, continuous coverage and are great for catching the "low-hanging fruit" before it becomes a real problem.

- Penetration Testing (Pentesting): This is a much more focused, hands-on effort. A real human expert actively tries to exploit vulnerabilities to see just how far they can get into your systems. It’s a simulation of a real-world attacker, designed to find complex flaws that automated tools almost always miss.

- Red Teaming: Think of this as a full-blown attack simulation. A red team acts as a genuine adversary, using whatever means necessary—technical exploits, social engineering, even physical access—to achieve a specific objective, like exfiltrating sensitive data. This tests your entire security posture: technology, people, and processes.

- Breach and Attack Simulation (BAS): These platforms bring automation to the fight, continuously running thousands of simulated attacks. BAS tools give you a near real-time dashboard showing how your controls are holding up against the latest threat tactics, with far less operational risk than a live test.
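To make the scanning idea concrete, here is a toy version of what an automated scanner does at its core: compare what is installed against a feed of known-vulnerable versions. Everything in it is hypothetical, including the package names, advisory IDs, and feed format, and it assumes every version below the first fixed release is affected. Real scanners add fingerprinting, authenticated checks, and far larger vulnerability databases.

```python
def parse_version(v: str) -> tuple[int, ...]:
    """Turn '2.4.10' into (2, 4, 10) so versions compare numerically, not as text."""
    return tuple(int(part) for part in v.split("."))


# Hypothetical advisory feed: package -> list of (advisory_id, first_fixed_version).
ADVISORIES = {
    "examplelib": [("EXAMPLE-0001", "2.4.10")],
    "demo-server": [("EXAMPLE-0002", "1.2.0"), ("EXAMPLE-0003", "1.5.3")],
}


def scan(inventory: dict[str, str]) -> list[tuple[str, str, str]]:
    """Return (package, installed_version, advisory_id) for every match."""
    findings = []
    for package, installed in inventory.items():
        for advisory_id, fixed_in in ADVISORIES.get(package, []):
            # Simplification: anything older than the first fixed version is flagged.
            if parse_version(installed) < parse_version(fixed_in):
                findings.append((package, installed, advisory_id))
    return findings


host_inventory = {"examplelib": "2.4.9", "demo-server": "1.3.0", "other": "0.1"}
print(scan(host_inventory))
# [('examplelib', '2.4.9', 'EXAMPLE-0001'), ('demo-server', '1.3.0', 'EXAMPLE-0003')]
```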

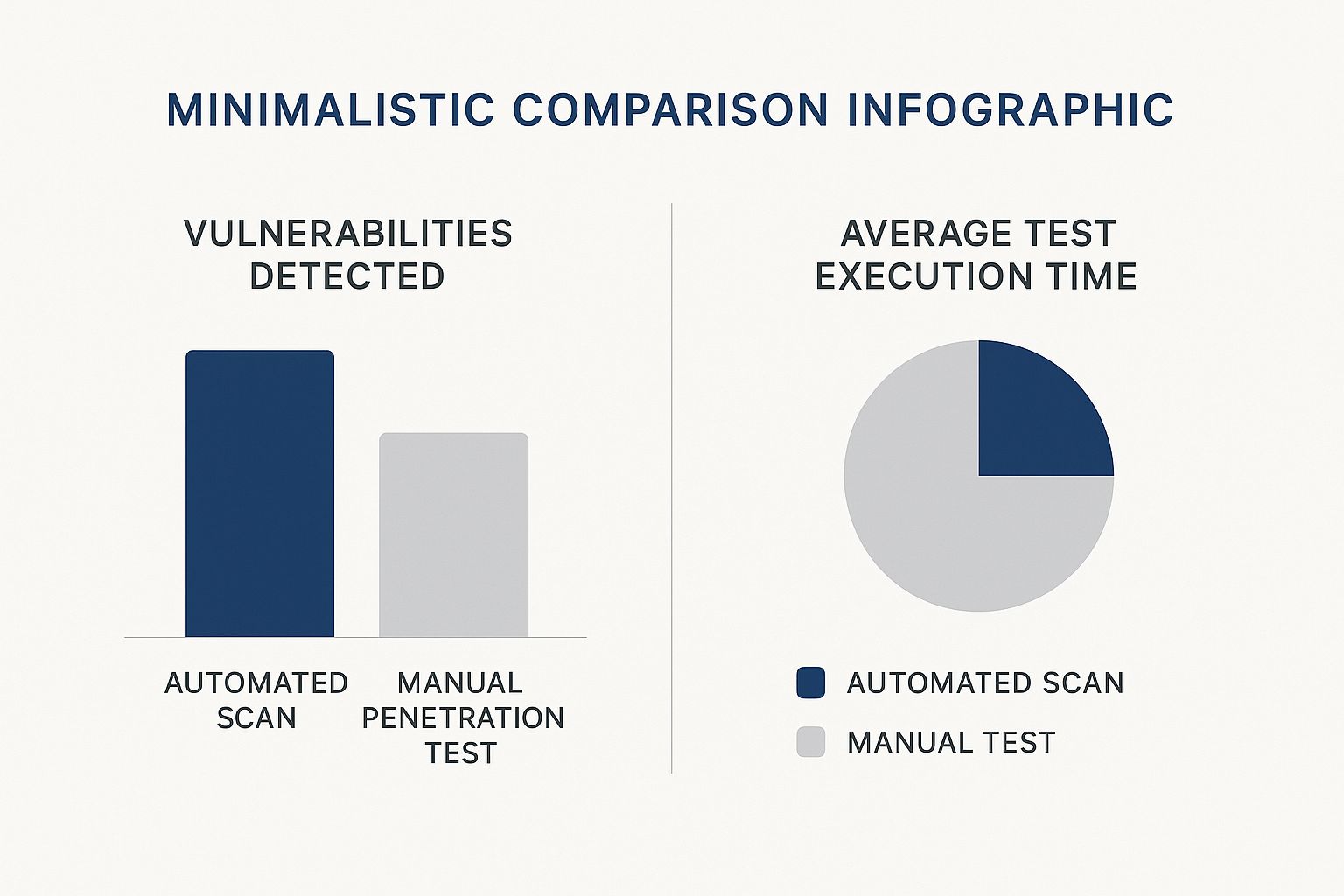

The image above illustrates the classic trade-off: automated scans are fast, but they often can't find the critical business-logic vulnerabilities that a manual penetration test can uncover.

This really drives home the point that while automation gives you speed and scale, you still need human expertise for depth. That’s where you find the most impactful security gaps.

Comparison of Security Testing Methodologies

To make sense of these options, it helps to see them side-by-side. Each methodology has a distinct purpose and is best deployed at different times and for different reasons.

| Methodology | Primary Goal | Typical Frequency | Ideal Use Case |
|---|---|---|---|
| Vulnerability Scanning | Identify known vulnerabilities across a broad set of assets | Continuous or Weekly/Monthly | Maintaining baseline security hygiene and compliance. |
| Penetration Testing | Uncover exploitable flaws in a specific application or system | Annually or Before Major Launches | Securing critical systems like web apps or APIs. |
| Red Teaming | Test the organization's overall detection and response capabilities | Every 1-2 Years | Evaluating the maturity of your security operations (SOC). |
| Breach and Attack Simulation (BAS) | Continuously validate security control effectiveness against specific threats | Ongoing / Real-Time | Getting constant feedback on control performance and gaps. |

This table isn't about picking one "best" method. A mature security program layers these approaches to create a defense-in-depth testing strategy.

Prioritizing With Real-World Scenarios

Let's ground this in reality. Say your company is about to launch a new e-commerce platform. A vulnerability scan is a sensible first step for a quick health check, but for something this critical, it’s nowhere near enough. This is a textbook scenario for a deep-dive penetration test. The mission is to have an expert ethically hack the platform to find flaws in the payment gateway or customer data storage before a real attacker does.

Given that a staggering 73% of successful corporate breaches in 2023 involved exploiting web application vulnerabilities, a pentest isn’t just a good idea—it's essential. It's purpose-built to find exactly those kinds of weaknesses.

Now, picture a different situation. You need to know if your Security Operations Center (SOC) can actually detect and shut down a sophisticated, multi-stage attack. This is where a red team exercise is invaluable. The red team’s goal isn’t just to find one vulnerability; it's to test your entire defensive chain, from the initial alert to the final incident response.

Pro Tip: Don't think of these methods as an either/or choice. The best security programs blend them. A common recipe is continuous vulnerability scanning on all assets, annual penetration tests on critical apps, and a full-scope red team exercise every 18-24 months.

Navigating the Crowded Tool Market

Once you’ve settled on your methods, you need the right tools. The market is flooded with options, from powerful open-source projects to enterprise-grade commercial platforms.

For anyone just getting started with web application testing, a fantastic open-source tool is the OWASP Zed Attack Proxy (ZAP). It’s incredibly versatile, supporting both automated scanning and manual pentesting. It’s a great way to build skills without a huge budget. If you run WordPress, a dedicated WordPress vulnerability scanner is also invaluable for hardening one of the web's most popular platforms.

On the commercial front, platforms from vendors like Tenable, Qualys, and Rapid7 offer comprehensive vulnerability management. These tools are built for larger organizations that need robust scanning, detailed reporting, and deep integrations to manage a complex attack surface. They excel at giving you a single pane of glass over everything from on-premises servers to your cloud footprint. The trick is to pick a tool that fits your team's skills, your budget, and the specific risks you're trying to manage.

Executing Tests and Analyzing the Results

Alright, this is where the rubber meets the road. Running security tests can be a nerve-wracking experience, but the real magic isn't in launching the scan—it's in what you do with the results. The goal is to turn a mountain of raw data into intelligence that actually makes you safer.

First things first: you have to conduct these tests safely. The whole point is to find holes before someone else does, not to cause an outage yourself. This means setting crystal-clear rules of engagement, especially for more hands-on tests like penetration testing. You need to define what's off-limits, establish testing windows, and have an escalation plan ready just in case. A well-communicated plan is what separates a controlled test from a chaotic incident.
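Rules of engagement are easier to honor when the tooling enforces them. Here is a minimal sketch, assuming the agreed scope is a list of CIDR ranges, an exclusion list, and an approved testing window. The ranges are RFC 5737 documentation addresses standing in for your real ones, and the overnight window is a placeholder.

```python
import ipaddress
from datetime import datetime, time

# Placeholder scope: RFC 5737 documentation addresses stand in for real assets.
IN_SCOPE = [ipaddress.ip_network("203.0.113.0/24")]
OFF_LIMITS = [ipaddress.ip_network("203.0.113.128/28")]  # e.g. a fragile legacy segment
WINDOW_START, WINDOW_END = time(22, 0), time(4, 0)       # agreed overnight window


def in_window(now: datetime) -> bool:
    """True if `now` is inside the window, handling windows that cross midnight."""
    t = now.time()
    if WINDOW_START <= WINDOW_END:
        return WINDOW_START <= t < WINDOW_END
    return t >= WINDOW_START or t < WINDOW_END


def may_test(target: str, now: datetime) -> bool:
    """Permit a probe only for in-scope, non-excluded targets inside the window."""
    addr = ipaddress.ip_address(target)
    if any(addr in net for net in OFF_LIMITS):
        return False
    return any(addr in net for net in IN_SCOPE) and in_window(now)


night = datetime(2024, 5, 1, 23, 30)
print(may_test("203.0.113.10", night))   # True
print(may_test("203.0.113.130", night))  # False: excluded segment
print(may_test("198.51.100.5", night))   # False: out of scope
```

Wiring a check like this into whatever launches your scans means an off-limits host is skipped by default instead of relying on someone remembering the exclusion list.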

From Raw Data to Actionable Insights

Once the dust settles, you're going to be looking at a lot of data. A raw vulnerability scan report can be intimidating, often listing hundreds of potential problems. Your first job is to triage these findings and weed out the false positives. You have to validate each one to confirm it's a real, exploitable issue and not just scanner noise.
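Part of that triage can be scripted. The sketch below assumes findings arrive as simple dictionaries with hypothetical `host`, `check_id`, and `title` fields (real scanner exports differ by vendor). It collapses duplicates reported by overlapping scans and drops anything a person has already investigated and confirmed as a false positive; the script itself never decides what is a false positive.

```python
def triage(findings: list[dict], false_positives: set[tuple[str, str]]) -> list[dict]:
    """Deduplicate findings and drop reviewed false positives.

    `false_positives` holds (host, check_id) pairs that a human has already
    validated as not being real, exploitable issues.
    """
    seen = set()
    remaining = []
    for f in findings:
        key = (f["host"], f["check_id"])
        if key in seen or key in false_positives:
            continue
        seen.add(key)
        remaining.append(f)
    return remaining


# Hypothetical raw export with a duplicate and a previously suppressed item.
raw = [
    {"host": "10.0.0.5", "check_id": "TLS-WEAK-CIPHER", "title": "Weak TLS cipher"},
    {"host": "10.0.0.5", "check_id": "TLS-WEAK-CIPHER", "title": "Weak TLS cipher"},
    {"host": "10.0.0.7", "check_id": "HTTP-BANNER", "title": "Server banner disclosed"},
]
suppressed = {("10.0.0.7", "HTTP-BANNER")}
print(triage(raw, suppressed))  # only the single 10.0.0.5 finding remains
```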

After that comes the most important part: prioritization. Let's be honest, not all vulnerabilities carry the same weight. A critical flaw on a dusty old internal server is probably less urgent than a medium-risk bug on your e-commerce checkout page.

To get this right, you really need to weigh three things:

- Asset Criticality: How much does this system matter to the business? A threat to your customer database is a five-alarm fire; a bug on a non-production dev box is not.

- Exploitability: How hard is it for a real attacker to use this flaw? A simple, one-click exploit is a much bigger deal than something that requires a convoluted, multi-step attack chain.

- Potential Impact: If this gets exploited, how bad is the damage? Are we talking about minor service disruption, or are we looking at catastrophic data loss or financial theft?

Walking through this process turns a messy, overwhelming list into a focused, prioritized action plan.
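If you want the three factors to produce a consistent ranking, a simple multiplicative score is one option. This is a sketch, not a standard: the 1-to-5 scales and the multiplication are assumptions (scoring systems such as CVSS weigh factors differently), but it captures the point that a medium bug on the checkout page can outrank a critical flaw on a forgotten internal box.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    title: str
    asset_criticality: int  # 1 (lab box) .. 5 (crown-jewel system)
    exploitability: int     # 1 (convoluted multi-step chain) .. 5 (one-click exploit)
    impact: int             # 1 (minor disruption) .. 5 (catastrophic loss)

    def score(self) -> int:
        # Assumed model: multiply the three 1-5 ratings, giving a range of 1..125.
        return self.asset_criticality * self.exploitability * self.impact


# Hypothetical findings echoing the examples above.
findings = [
    Finding("Critical RCE on retired internal server", 1, 4, 5),
    Finding("Medium XSS on e-commerce checkout", 5, 4, 3),
    Finding("Verbose error page on dev box", 1, 3, 1),
]

for f in sorted(findings, key=Finding.score, reverse=True):
    print(f.score(), f.title)
# 60 Medium XSS on e-commerce checkout
# 20 Critical RCE on retired internal server
# 3 Verbose error page on dev box
```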

Digging Deeper with Root Cause Analysis

Fixing a bug is good. Understanding why that bug was there in the first place is so much better. This is what root cause analysis is all about. It forces you to stop asking "what broke?" and start asking "why did our process allow this to break?"

Don't just patch the symptom; cure the disease. If you find a SQL injection vulnerability, the immediate fix is to rewrite the affected query to use bound parameters instead of concatenating user input into the SQL string. The root cause, however, might be that your developers lack proper secure coding training, meaning the same mistake will likely appear in the next application they build.

When you address the root cause, you're not just playing whack-a-mole—you're systemically improving your entire security posture and preventing whole categories of future vulnerabilities.
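For the SQL injection case, the fix that reliably closes the hole is a parameterized query; trying to sanitize input by hand is easy to get wrong. The sketch below uses Python's built-in `sqlite3` module with an in-memory database and a hypothetical `customers` table. The vulnerable function splices user input into the SQL text; the fixed one passes it as a bound parameter, so the database treats it strictly as data.

```python
import sqlite3

# Hypothetical table in a throwaway in-memory database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (email TEXT, name TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    [("ana@example.com", "Ana"), ("bo@example.com", "Bo")],
)


def find_customer_vulnerable(email: str) -> list:
    # BAD: user input becomes part of the SQL statement itself.
    return conn.execute(
        f"SELECT name FROM customers WHERE email = '{email}'"
    ).fetchall()


def find_customer_fixed(email: str) -> list:
    # GOOD: the ? placeholder binds the input as a value, never as SQL.
    return conn.execute(
        "SELECT name FROM customers WHERE email = ?", (email,)
    ).fetchall()


payload = "' OR '1'='1"
print(find_customer_vulnerable(payload))  # leaks every row in the table
print(find_customer_fixed(payload))       # []
```

The parameterized query closes this one hole. The root-cause work is making that pattern the team's default, through training, code review, and shared data-access helpers.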

Demand for this kind of in-depth validation is one reason the security testing market is booming. Forecasts show it's expected to jump from USD 14.5 billion in 2024 to USD 43.9 billion by 2029. That's a massive signal that companies are finally getting serious about not just finding flaws, but truly understanding and fixing them.

Prioritizing Your Remediation Efforts

With your findings sorted and root causes identified, it's time to build a concrete remediation plan. This means assigning tickets to owners, setting firm deadlines, and tracking everything to completion. Our security audit checklist is a great resource for creating a framework that ensures nothing gets lost in the shuffle.

Here’s a practical way I've seen teams categorize their remediation work effectively:

| Priority | Criteria | Example Action |
|---|---|---|
| P1 - Critical | Exploitable vulnerability on a critical, internet-facing system. | Patch immediately within 24-48 hours. |
| P2 - High | Serious flaw on a critical internal system or a moderate flaw on an external one. | Remediate within the next 1-2 weeks. |
| P3 - Medium | Vulnerability with limited impact or one that is difficult to exploit. | Schedule fix for the next planned maintenance cycle. |
| P4 - Low | Informational finding or a best practice recommendation with low risk. | Add to the technical debt backlog for future consideration. |

This kind of systematic approach helps ensure your team's limited time and energy go to the threats that pose the biggest risk to the business. This is how you turn test results into genuine, measurable security improvements.
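If your tracker doesn't compute deadlines for you, the P1-P4 scheme translates directly into a lookup. The sketch below takes the upper bound of each range (48 hours for P1, two weeks for P2) and uses assumed placeholder values for the open-ended rows: 30 days for a maintenance cycle and 180 days for a backlog review.

```python
from datetime import datetime, timedelta

# Upper bound of each SLA; the P3 and P4 values are assumed placeholders.
SLA = {
    "P1": timedelta(hours=48),
    "P2": timedelta(weeks=2),
    "P3": timedelta(days=30),   # assumed length of one maintenance cycle
    "P4": timedelta(days=180),  # assumed backlog review horizon
}


def due_date(priority: str, found_at: datetime) -> datetime:
    """Deadline for a finding, measured from when it was confirmed."""
    return found_at + SLA[priority]


found = datetime(2024, 3, 1, 9, 0)
print(due_date("P1", found))  # 2024-03-03 09:00:00
print(due_date("P2", found))  # 2024-03-15 09:00:00
```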

Turning Findings Into Actionable Improvements

A security test report is useless if it just sits on a server collecting digital dust. The real value comes after the testing is done, when you take those findings and turn them into a concrete plan for improvement. This is where you shift from analysis to action, translating technical jargon into a clear roadmap for a stronger, more resilient organization.

It all starts with how you communicate the results. I’ve seen it a hundred times: a single, dense, one-size-fits-all report gets sent out, and nothing happens. Why? Because the highly technical deep-dive your engineers need is the very thing that will make your CEO’s eyes glaze over. To actually get things done, you have to speak your audience's language.

Crafting Reports That Actually Get Read

The trick is to create different versions of your report for different people. This isn't about hiding the truth; it's about framing it in a way that resonates with each group and motivates them to act.

Think of it this way—you need two distinct documents:

- The Technical Deep-Dive: This is for your engineers, developers, and IT staff. It needs to be packed with every relevant detail: the specific vulnerabilities, the affected systems, exact steps to replicate the issue, log snippets, and straightforward guidance for fixing the problem. The goal is to give them everything they need to find, understand, and squash the bug.

- The Executive Summary: This report is for leadership, the board, and other business stakeholders. Keep it concise and strategic. Ditch the technical jargon and translate the findings into business risk. For instance, instead of saying "SQL injection vulnerability," frame it as "a security flaw that could expose all 450,000 of our customer records, leading to serious regulatory fines and lasting brand damage."

An effective executive summary answers three simple questions: What did we find? Why should the business care? What are we going to do about it? When you connect security issues to business risk and ROI, you elevate them from a technical problem to a business priority.

Building and Managing a Remediation Plan

Once you've shared the findings, it's time to build a structured plan to fix them. This is where many organizations drop the ball. Without clear ownership and a solid process, critical vulnerabilities can easily fall through the cracks. A well-organized remediation plan is your best defense against that.

Ultimately, security testing should drive continuous improvement, ensuring your defenses are always evolving to keep up with new threats. Your remediation plan isn't just about patching bugs—it's a critical feedback mechanism for your entire security program.

A strong plan really comes down to these core elements:

- Smart Prioritization: Use that risk-based approach we talked about earlier—factoring in criticality, exploitability, and potential impact—to rank every single finding. Not all vulnerabilities are created equal, and your team's time is a precious resource.

- Clear Ownership: Assign each vulnerability to a specific person or team. Ambiguity is the enemy of progress. If everyone is responsible, then no one is.

- Firm Deadlines: Based on priority, set realistic but firm timelines for remediation. A critical flaw might demand a 24-48 hour SLA, while a low-risk issue can be scheduled for the next software release.

- A Solid Tracking System: Use a ticketing system like Jira or ServiceNow, or even a dedicated vulnerability management platform, to track every finding from discovery to closure. This creates a transparent, auditable trail of your progress.
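Whatever system you use, the recurring check is the same: every open finding has an owner and is inside its deadline. Here is a small sketch of that report, assuming tickets exported as records with hypothetical `id`, `owner`, `due`, and `status` fields rather than any specific Jira or ServiceNow schema.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Ticket:
    id: str
    owner: Optional[str]  # None means nobody has claimed it
    due: date
    status: str           # "open" or "closed"


def needs_attention(tickets: list[Ticket], today: date) -> dict[str, list[str]]:
    """Group open tickets that are unowned or past their deadline."""
    report = {"unowned": [], "overdue": []}
    for t in tickets:
        if t.status != "open":
            continue
        if t.owner is None:
            report["unowned"].append(t.id)
        if t.due < today:
            report["overdue"].append(t.id)
    return report


tickets = [
    Ticket("VULN-1", "web-team", date(2024, 3, 3), "open"),
    Ticket("VULN-2", None, date(2024, 4, 1), "open"),
    Ticket("VULN-3", "infra-team", date(2024, 3, 1), "closed"),
]
print(needs_attention(tickets, today=date(2024, 3, 10)))
# {'unowned': ['VULN-2'], 'overdue': ['VULN-1']}
```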

Closing the Loop with Re-Testing

You're not done until you've proven the fix actually works. The final—and most often skipped—step is to re-test. Once the dev team says a vulnerability is patched, your security team needs to go back in and try to exploit it all over again.

This validation step is absolutely non-negotiable for two big reasons:

- It confirms the fix is truly effective. I've seen patches that only address the symptom, not the root cause, leaving a different but equally dangerous path open for an attacker.

- It ensures the fix didn't break something else. It’s surprisingly common for a security patch to accidentally break existing functionality or, worse, create a brand-new vulnerability.
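Those two reasons map onto two checks, and a finding should only close when both pass. A minimal sketch, assuming each finding carries two hypothetical callables: one that replays the original exploit and reports whether it still works, and one that exercises the normal functionality around the fix.

```python
from typing import Callable


def retest(exploit_still_works: Callable[[], bool],
           functionality_ok: Callable[[], bool]) -> str:
    """Decide a finding's status after the fix is deployed."""
    if exploit_still_works():
        return "reopen: fix is ineffective"
    if not functionality_ok():
        return "reopen: fix broke existing behavior"
    return "verified: close the ticket"


# Toy example (hypothetical): a patched path check that should now reject
# a traversal payload while still allowing ordinary file paths.
def is_allowed(path: str) -> bool:
    return ".." not in path.split("/")


print(retest(lambda: is_allowed("../../etc/passwd"),
             lambda: is_allowed("reports/q1.pdf")))
# verified: close the ticket
```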

This cycle—test, report, remediate, and re-test—is the engine of any mature security program. It creates a powerful feedback loop that systematically shrinks your attack surface over time. This approach also reinforces your organization’s broader data security best practices, weaving security into the fabric of your development lifecycle instead of treating it as an afterthought. It's how you move from simply checking your controls to demonstrably strengthening them.

Frequently Asked Questions About Security Control Testing

Once you start moving from security theory to real-world practice, the practical questions start popping up fast. It’s one thing to have a plan on paper, but it's another to actually execute it. Let's dig into some of the most common questions our team hears when helping organizations build out their security control testing programs.

Vulnerability Scan Vs. Penetration Test

This is probably the most frequent point of confusion, and for good reason. Both are designed to find weaknesses, but they are worlds apart in approach and depth.

Think of a vulnerability scan as an automated, wide-net search. It’s like casting a massive fishing net to see what you catch. A scanner methodically checks your systems against a huge database of known, common vulnerabilities. It’s fast, gives you broad coverage, and is fantastic for catching that "low-hanging fruit"—the common misconfigurations attackers love to find first.

A penetration test (pentest), however, is a completely different beast. It's a manual, goal-driven attack simulation carried out by a skilled human. This person thinks like a real adversary, using creativity and experience to find complex business logic flaws or chain together multiple low-risk issues to create a critical threat. These are the kinds of things an automated scanner is completely blind to.

A scan tells you what might be broken. A pentest proves if it can actually be exploited to cause damage. You need both for a complete picture of your security posture.

How Often Should We Test Security Controls?

There’s no magic number here. The right testing frequency depends entirely on your specific situation. A static, low-risk internal system simply doesn't need the same attention as a public-facing payment app that’s updated every week.

To figure out the right cadence, you need to weigh a few key factors:

- Your Risk Appetite: How much risk is your organization willing to stomach? If you're in a highly regulated industry or handle incredibly sensitive data, your tolerance for risk will be near zero, which means you'll be testing far more often.

- Compliance Needs: Some standards don't leave this up to interpretation. PCI DSS, for instance, requires at least annual penetration tests and quarterly vulnerability scans, and HIPAA requires periodic evaluations of your security safeguards. Consider these your absolute minimums.

- Rate of Change: The more your environment changes, the more you need to test. A team practicing continuous integration and deployment (CI/CD) should have automated security testing baked right into their pipeline. For a legacy system that rarely gets touched, an annual test might be perfectly fine.

As a general rule, most organizations land on a hybrid approach: continuous automated scanning on their most critical assets, backed up by in-depth penetration tests at least annually or after any major system change.
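For CI/CD teams, baking testing into the pipeline usually means a gate step that fails the build when a scan reports serious findings. A minimal sketch, assuming your scanner can emit a JSON array of findings with `severity` and `title` fields; the field names, the severity labels, and the `high` default threshold are all assumptions to adapt to your tool's actual report format.

```python
import json

# Ordering used to compare severities; unknown labels rank as 0 and never block.
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def gate(findings: list[dict], fail_at: str = "high") -> int:
    """Return an exit code: 1 if any finding is at or above `fail_at`, else 0."""
    threshold = SEVERITY_RANK[fail_at]
    blocking = [
        f for f in findings
        if SEVERITY_RANK.get(str(f.get("severity", "")).lower(), 0) >= threshold
    ]
    for f in blocking:
        print(f"BLOCKING: {f.get('severity')} - {f.get('title', 'untitled')}")
    return 1 if blocking else 0


def gate_from_file(path: str, fail_at: str = "high") -> int:
    """Load a JSON array of findings from `path` and apply the gate."""
    with open(path, encoding="utf-8") as fh:
        return gate(json.load(fh), fail_at)


sample = [
    {"severity": "medium", "title": "Missing security header"},
    {"severity": "critical", "title": "SQL injection in /search"},
]
print(gate(sample))  # prints the BLOCKING line for the critical finding, then 1
```

In a pipeline, a wrapper script would call something like `sys.exit(gate_from_file("scan-results.json"))` (the file name is hypothetical) so a nonzero exit code fails the job. Since unrecognized severity labels pass silently here, you may prefer to fail closed on them instead.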

Should We Test In-House or Hire a Third Party?

Deciding whether to build an internal testing team or outsource the work is a major strategic choice, and there are good arguments for both.

In-house teams have unparalleled contextual knowledge. They know your systems, your culture, and the "why" behind your architecture. This deep familiarity allows them to test more frequently and integrate security seamlessly into the development lifecycle, which is perfect for routine checks.

On the other hand, an external third party brings something an internal team can never have: a truly unbiased perspective. They aren't influenced by internal politics or held back by assumptions about how things are "supposed to" work. These experts have honed their skills across hundreds of different environments and are often more dialed into the latest attack techniques. That outside-in view is invaluable for spotting the blind spots your internal team might miss.

Many of the most mature security programs use a hybrid model that gives them the best of both worlds:

- Use the internal team for frequent, routine vulnerability scanning and regression testing.

- Bring in external experts for deep-dive annual penetration tests and challenging red team exercises.

This strategy combines deep institutional knowledge with a fresh, objective viewpoint. Of course, when you bring in outside help, you have to do your homework. Performing a thorough third-party risk assessment is a non-negotiable step to make sure your partners aren’t accidentally introducing new risks into your ecosystem.

At Whisperit, we understand that managing sensitive information goes beyond just creating documents—it's about protecting them. That’s why our AI-powered dictation and editing platform is built on a foundation of security, featuring Swiss hosting, end-to-end encryption, and compliance with GDPR and SOC 2 standards. Transform your workflow and secure your data by visiting https://whisperit.ai to learn more.