A Guide to Artificial Intelligence Security Risks

When we talk about artificial intelligence security, we’re dealing with a totally different breed of threat. These aren't your typical network attacks that traditional cybersecurity tools are built to catch. Instead, these risks go straight for the AI's brain—its core logic—turning what should be a powerful asset into a serious liability. Getting a handle on this shift is the first step to building a defense that actually works.

Why Traditional Security Falls Short with AI

As more companies weave AI into their operations, many are learning a hard lesson: standard firewalls and antivirus software just aren't enough. Think about it. Traditional security is designed to stop known threats, like viruses with recognizable digital signatures or brute-force login attempts. It’s like a bouncer at a club checking IDs—if the credentials seem legitimate, you're in.

But AI security risks are far more subtle. They don't just bang on the front door; they manipulate the AI's learning process itself.

Imagine you're trying to teach a student using a set of textbooks. Now, what if an attacker secretly rewrote entire chapters with plausible-sounding but completely false information? The student—your AI—would learn all these flawed lessons, believing them to be true. From that moment on, it would make consistently wrong decisions, and the school’s security system would never know anything was amiss. The "attack" happened during the learning phase, completely bypassing the guards at the gate.

A New Breed of Vulnerabilities

This fundamental difference opens up a whole new can of worms that old-school security models can't handle. And with AI adoption skyrocketing, this attack surface is expanding fast. In fact, a recent report found that 74% of cybersecurity professionals see AI-powered attacks as a major challenge, with bad actors finding clever ways to exploit vulnerabilities.

Here’s a quick look at what we're up against:

- Data Poisoning: An attacker deliberately taints the training data, building a compromised model from the ground up.

- Adversarial Attacks: Someone crafts inputs that look perfectly normal to us but are specifically designed to fool an AI into making a bad call.

- Model Inversion: A clever attacker can "interrogate" a finished model to reverse-engineer it and expose the sensitive, private data it was trained on.

This new reality calls for a security strategy that goes way beyond just guarding the perimeter. We have to protect the integrity of the data, the model itself, and the entire decision-making pipeline.

To get a sense of the main threats, here’s a quick overview.

Key AI Security Risks at a Glance

| Threat Type | Primary Impact | Simple Analogy |
|---|---|---|
| Data Poisoning | Corrupts the model’s core logic during training, leading to biased or incorrect outputs. | Teaching a child from a textbook filled with subtle lies and misinformation. |
| Adversarial Attacks | Tricks a live AI model into making specific errors with carefully crafted, deceptive inputs. | Using an optical illusion to make a self-driving car misread a stop sign. |
| Model Inversion | Exposes the sensitive, private data the model was originally trained on. | Figuring out the secret ingredients in a cake just by tasting a single slice. |
| Insider Threats | A trusted person with access intentionally or accidentally compromises the AI system. | A disgruntled chef intentionally spoiling the secret family recipe. |

As you can see, these risks are complex and require a much deeper level of protection than what most organizations currently have in place.

Countering these threats effectively requires a more dynamic and adaptive security posture. Before diving deeper, having a solid understanding of artificial intelligence is a huge help. It provides the context needed to see why these models are so uniquely vulnerable. From there, adopting a modern security framework is non-negotiable. A great place to start is learning what Zero Trust security is: a principle that’s all about verifying every single interaction within your AI ecosystem.

How Data Poisoning Corrupts AI Models from Within

When we think about AI security risks, data poisoning is one of the most insidious. It doesn't kick down the front door. Instead, it quietly slips poison into the well, corrupting the AI model from the inside out until a once-trusted tool becomes an agent of misinformation.

Think of it this way: imagine a chef who relies on a family recipe book. A rival sneaks in and carefully changes every "sugar" measurement to "salt." The next time the chef bakes a cake, they'll follow the instructions perfectly, but the result will be disastrous. The recipe—the foundation—is compromised. Data poisoning does the exact same thing to an AI's training data.

Attackers inject malicious, mislabeled, or just plain wrong data into the set an AI learns from. Since the model's entire job is to find and learn patterns, it diligently studies this tainted information, baking the flaws right into its core logic. The result is an AI that makes consistently wrong predictions once it’s out in the world.

Targeted vs. Indiscriminate Attacks

Not all data poisoning attacks look the same. Some are like carpet bombing, while others are like a sniper's shot. Each has a very different goal, and knowing the difference is crucial for spotting the threat.

An indiscriminate attack is all about creating chaos. The goal is simple: degrade the model's overall performance and erode trust in the system. An attacker might, for instance, flood a pet recognition model's training set with thousands of pictures of cats labeled "dog." The model becomes broadly inaccurate and, frankly, useless.
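To make the effect concrete, here is a minimal sketch of label-flipping poisoning against a toy classifier. Everything in it is an illustrative assumption: the single "weight in kg" feature, the nearest-centroid model, and the sample values are invented for this example and do not describe any real pet recognition system.

```python
from statistics import mean

def train_centroids(samples):
    """Learn one centroid (average feature value) per label."""
    by_label = {}
    for feature, label in samples:
        by_label.setdefault(label, []).append(feature)
    return {label: mean(values) for label, values in by_label.items()}

def predict(centroids, feature):
    """Return the label whose centroid is closest to the feature."""
    return min(centroids, key=lambda label: abs(feature - centroids[label]))

# Hypothetical clean training set: (weight in kg, label).
clean = [(3.0, "cat"), (4.0, "cat"), (5.0, "cat"),
         (20.0, "dog"), (25.0, "dog"), (30.0, "dog")]

# The attacker floods the set with cat-sized animals labeled "dog".
poison = [(4.0, "dog")] * 20

clean_model = train_centroids(clean)
poisoned_model = train_centroids(clean + poison)

print(predict(clean_model, 6.0))     # classification from clean data
print(predict(poisoned_model, 6.0))  # classification after poisoning
```

With the clean data, a 6 kg animal sits closest to the cat centroid. Once the mislabeled samples drag the "dog" centroid down toward cat territory, the same input is classified as a dog, even though the training code never changed.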

A targeted attack is much more subtle and, in many ways, more dangerous. Here, the attacker wants the model to fail in a very specific, predictable way.

- Creating Backdoors: Imagine a fraud detection model. An attacker could poison it to learn that transactions from a specific set of accounts are always legitimate, effectively creating a hidden backdoor for theft.

- Engineering Biased Outcomes: A loan approval algorithm could be skewed by injecting data that falsely links a certain zip code to high default rates. Suddenly, every applicant from that neighborhood is automatically denied.

- Trigger-Based Failures: This is where it gets really scary. A self-driving car's AI could be poisoned to see a specific, rare type of stop sign as a "go" signal. This creates a highly dangerous vulnerability that only activates under very specific conditions.

These targeted attacks are so tricky to find because the model works perfectly well 99% of the time. It only breaks when its specific trigger is pulled.

Real-World Consequences of Poisoned Data

A successful data poisoning attack can be anything from a minor annoyance to an absolute catastrophe. When the systems we count on for critical decisions are built on a foundation of lies, the fallout is serious.

Take a product recommendation engine on an e-commerce site. An attacker could poison its data to associate popular, everyday products with harmful or inappropriate items. Before you know it, the platform is recommending dangerous products to families, wrecking its reputation and opening itself up to major liability.

"As companies depend on accumulating more consumer data to develop products such as artificial intelligence... they may create valuable pools of information that are targeted by malicious actors." - Federal Trade Commission

Now, let's raise the stakes. Think about a medical AI designed to spot cancerous cells in scans. If its training data is poisoned with images where malignant tumors are mislabeled as benign, the AI will learn to ignore the very thing it's supposed to find. This could lead to missed diagnoses and devastating outcomes for patients who are putting their trust in that technology.

This is why securing the data pipeline is ground zero for AI security. It means putting rigorous data validation and anomaly detection in place and maintaining a clean chain of custody for every training set. Organizations also need to be proactively testing their models for these kinds of vulnerabilities. You can learn more about how to find these weak spots in our guide on penetration testing best practices. By treating training data as the critical asset it is, you can start building a real defense against this silent but potent threat.

Understanding Adversarial Attacks: How to Deceive an AI

While data poisoning is about corrupting an AI model from the inside out during training, adversarial attacks are all about fooling a fully trained, operational AI. Think of it as creating a sophisticated optical illusion for a machine. An attacker crafts an input—like an image or a sound file—that looks perfectly normal to us but is specially engineered to exploit the AI's learned patterns and blind spots, causing it to fail in spectacular ways.

Here’s a classic example. You show a cutting-edge image recognition AI a photo of a panda. It correctly identifies it. But then, a malicious actor adds a layer of what looks like random digital "noise" to the image. This pattern is so subtle it's completely invisible to the human eye.

The AI, however, now misidentifies the panda as a gibbon with 99% certainty.

This happens because an AI doesn't "see" a panda like we do. It sees a complex map of data points. The adversarial noise is precision-engineered to nudge those data points just enough to push them over a decision boundary in the model's internal logic. In short, the attack reverse-engineers the AI's thinking process to craft the perfect deception.
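The "nudge across a decision boundary" idea can be sketched on a tiny linear classifier. This is a toy under stated assumptions: the weights, the input, and the step size `eps` are made up, and the perturbation rule (move each feature a small fixed amount against the sign of its weight) is in the spirit of the fast gradient sign method rather than a reproduction of any specific published attack.

```python
def score(weights, bias, x):
    """Linear score; positive means class 1, otherwise class 0."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def predict(weights, bias, x):
    return 1 if score(weights, bias, x) > 0 else 0

def sign(value):
    return (value > 0) - (value < 0)

def perturb(weights, x, eps):
    """Shift every feature by at most eps in the direction that lowers the score.

    For a linear model the gradient of the score with respect to the input
    is just the weight vector, so sign(weight) gives the direction.
    """
    return [xi - eps * sign(w) for w, xi in zip(weights, x)]

# Hypothetical model and input, invented for illustration.
weights = [0.5, -0.3, 0.8, -0.6]
bias = 0.0
x = [1.0, 1.0, 1.0, 1.0]

x_adv = perturb(weights, x, eps=0.2)

print(predict(weights, bias, x), score(weights, bias, x))
print(predict(weights, bias, x_adv), score(weights, bias, x_adv))
```

No feature moves by more than 0.2, yet the combined effect across all features is enough to flip the prediction. Real image models have thousands of input dimensions, which is why a change too small to see can add up to a large shift in the score.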

Evasion Attacks: The Art of Real-Time Deception

The most common form of this threat is the evasion attack, which is designed to fool an AI during its normal, everyday operation. This is where the real-world consequences can get incredibly dangerous.

Let's take a self-driving car's computer vision system. An attacker could place a few small, specially designed stickers on a stop sign. To you or me, it's just a stop sign with some graffiti. But to the car's AI, the specific patterns on those stickers are enough to make it misclassify the sign as "Speed Limit 60 MPH," causing it to accelerate straight through an intersection.

Adversarial attacks exploit the gap between human perception and machine perception. An input that seems benign to us can be a clear, malicious command to an AI, turning a trusted system into a significant liability.

These attacks can be tailored to all kinds of AI systems:

- Facial Recognition: Someone could wear specially patterned glasses that make them invisible to a security camera or, even worse, cause them to be misidentified as someone else entirely.

- Voice Assistants: An attacker could embed a hidden, inaudible command inside a song or podcast. A human just hears background noise, but a voice assistant hears an instruction to "unlock the front door."

- Malware Detection: A virus could be slightly modified so its digital signature no longer matches what an AI security tool was trained to recognize, allowing it to slip past defenses completely undetected.

The sophistication here is growing alarmingly fast. A recent state of AI security report from Trend Micro reveals a sobering statistic: 93% of security leaders now expect to face AI-driven cyberattacks daily.

Model Extraction: Stealing the Secret Sauce

While evasion attacks manipulate an AI's output, extraction attacks have a far more insidious goal: to steal the model itself. This is a new kind of intellectual property theft where attackers create a functional clone of a proprietary AI model without ever accessing its internal code or training data.

So, how do they pull this off? They do it by repeatedly "pinging" the live model with inputs and carefully observing the outputs. It’s like a rival chef trying to steal a secret recipe. They don’t break into the kitchen; they just order the same dish hundreds of times, analyzing every single ingredient and flavor note until they can replicate it perfectly.

By analyzing how the model responds to thousands of queries, an attacker can train their own "student" model to closely mimic the "teacher" model's behavior. This lets them capture the value of years of R&D and expensive training data for a fraction of the cost, without ever touching the data itself. For firms in legal, finance, and healthcare that rely on specialized models, or those that use advanced natural language processing, this is a massive business risk.

Exposing Your Data Through Model Inversion and Extraction

So far, we've talked about tricking or corrupting AI. But what happens when the AI itself becomes the leak? Some of the most serious security risks don't just cause bad outputs—they directly compromise the sensitive data used to train the model in the first place, or even the model’s secret sauce.

This is where two particularly sneaky techniques come into play: model inversion and model extraction. Both represent a deep breach of trust, turning a helpful public tool into a source of private data leaks and a target for corporate espionage.

Model Inversion: The AI That Spills a Secret

Imagine a detective trying to reconstruct a crime scene they never saw. They can't ask the witness, "Tell me everything," but they can ask thousands of tiny, specific questions. "Was the lamp on? What color was the rug? Was there a window on the left?" Bit by bit, the answers allow the detective to piece together a surprisingly detailed picture.

That's exactly how model inversion works. An attacker doesn't have your training data, but they have access to your trained model. By repeatedly pinging the AI with carefully crafted queries and analyzing its responses, they can start to reverse-engineer the private data it learned from.

Think about a facial recognition model. An attacker could feed it a series of blurry or noisy images, tweaking them slightly until the model's confidence score for a specific person shoots up. The final result? A reconstructed, high-quality image of that person's face—even though their photo was supposed to be kept private within the training set.
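The tweak-and-check loop described above can be sketched in a few lines. This is a deliberately simplified stand-in, not a real attack on a real model: the hypothetical `make_confidence_oracle` plays the role of a model that reports higher confidence the closer an input is to a private training record, and the attacker only ever sees that confidence number.

```python
def make_confidence_oracle(private_record):
    """Toy stand-in for a model: confidence rises as the input nears the record."""
    def confidence(x):
        distance_sq = sum((a - b) ** 2 for a, b in zip(x, private_record))
        return 1.0 / (1.0 + distance_sq)
    return confidence

def reconstruct(query, dim, step=0.25, min_step=0.01):
    """Hill-climb on the confidence score using black-box queries only."""
    x = [0.5] * dim
    best = query(x)
    while step >= min_step:
        improved = False
        for i in range(dim):
            for delta in (step, -step):
                candidate = list(x)
                candidate[i] += delta
                candidate_score = query(candidate)
                if candidate_score > best:  # keep tweaks that raise confidence
                    x, best, improved = candidate, candidate_score, True
        if not improved:
            step /= 2  # refine once coarse tweaks stop helping
    return x

# Hypothetical private record (think of four pixel intensities).
secret = [0.2, 0.9, 0.5, 0.7]
oracle = make_confidence_oracle(secret)

guess = reconstruct(oracle, dim=len(secret))
print([round(v, 2) for v in guess])
```

The attacker never reads `secret` directly; the reconstruction comes entirely from watching how the confidence score reacts to small input changes. This is also why returning coarse labels instead of precise confidence scores makes this style of attack harder.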

Model inversion essentially turns your AI into an unintentional informant. The raw data might be locked down, but the model's learned patterns can be exploited to reveal confidential information, leading to serious privacy breaches.

The fallout from this can be huge, especially in regulated fields like healthcare or finance. A model trained on patient X-rays could inadvertently reveal medical images. A financial AI might leak clues about private transaction records. This doesn't just break customer trust; it can land you in hot water with regulators. It’s why a proactive review, like the process in this Data Protection Impact Assessment playbook, is critical for spotting these exposure risks before they become a real problem.

Model Extraction: Stealing Your Digital Blueprint

If model inversion is about stealing the data behind the AI, model extraction is about stealing the AI itself. Think of it as the digital equivalent of a competitor stealing your secret recipe without ever setting foot in your kitchen.

Here, the attacker treats your AI like a black box. They don't need the code or the training data. They just need to be able to use it. They systematically send a massive number of inputs to the model and meticulously log every single output.

With this huge question-and-answer dataset, they can train their own "student" model to closely mimic your "teacher" model's behavior. The goal is to create a functional clone that gives the same answers for the same inputs.

This is an incredibly dangerous attack for a few big reasons:

- Theft of Intellectual Property: Companies pour millions of dollars and countless hours into building their models. An extraction attack lets a competitor create a copycat model for a fraction of the cost—just the price of making API calls.

- Offline Vulnerability Testing: Once they have a perfect clone, attackers can hammer it with tests on their own servers to find weaknesses and adversarial exploits. They can find a way to break your system without you ever knowing they were looking.

- Loss of Competitive Advantage: That unique model predicting stock trends or analyzing legal documents is your edge. Once it's stolen and replicated, your advantage is gone.
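The query-and-imitate loop behind model extraction can be illustrated with a deliberately tiny example. The assumptions here are strong and chosen for clarity: the "victim" is a hypothetical one-feature classifier with a single hidden threshold, and the attacker can query it freely. Real models are far more complex, but the principle of learning from logged input/output pairs is the same.

```python
def make_threshold_model(threshold):
    """A one-feature classifier: label 1 at or above the threshold, else 0."""
    def model(x):
        return 1 if x >= threshold else 0
    return model

def extract_threshold(query, inputs):
    """Query the black box, log every answer, and fit a student threshold."""
    log = [(x, query(x)) for x in inputs]
    highest_zero = max(x for x, label in log if label == 0)
    lowest_one = min(x for x, label in log if label == 1)
    # The hidden boundary must lie between these two observed points.
    return (highest_zero + lowest_one) / 2, len(log)

# The victim's threshold is private; the attacker only calls victim(x).
victim = make_threshold_model(37.3)

stolen_threshold, queries_used = extract_threshold(victim, range(0, 101))
student = make_threshold_model(stolen_threshold)

print(stolen_threshold, queries_used)
```

Here 101 queries are enough to build a student that agrees with the victim everywhere except in a narrow band around the boundary. The number of queries needed grows with model complexity, which is what makes query volume a useful signal for defenders.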

Both of these attacks drive home a critical point: just securing the servers and databases around your AI isn't enough. The model itself is now a prime target, and what was once your greatest digital asset can quickly become your biggest liability.

Practical Strategies to Defend Your AI Systems

Knowing the risks AI systems face is half the battle; building a real defense is the other. It's not enough to just react when something goes wrong. You need a proactive, multi-layered security plan that protects your AI models from the moment you collect the data to the day you deploy them.

Securing the Foundation: Your Training Data

Let's start at the beginning. The most direct way to stop data poisoning is with strict data sanitization and input validation. Think of it as a bouncer at the door of your dataset. Before any piece of information gets in, it needs to be checked for anything that looks out of place—anomalies, statistical oddities, or anything else that smells like manipulation.

On top of that, you need a secure data pipeline with tight access controls. This ensures only trusted, authorized sources are feeding data into your model. It’s a one-two punch: filtering out bad data and locking down the pipeline to prevent it from getting there in the first place.
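As a rough illustration of what a "bouncer" check might look like, the sketch below flags values that sit far from the rest of their class using a median-based score. The function name, the sample values, and the cutoff are assumptions for this example; the constant 0.6745 and a cutoff of 3.5 are conventional choices for this kind of modified z-score, not requirements.

```python
from statistics import median

def flag_outliers(values, threshold=3.5):
    """Return values whose modified z-score exceeds the threshold.

    The score uses the median and the median absolute deviation (MAD),
    which are less easily skewed by a few extreme points than mean and
    standard deviation.
    """
    center = median(values)
    mad = median([abs(v - center) for v in values])
    if mad == 0:
        return []  # no spread to measure against; nothing can be flagged
    return [v for v in values if 0.6745 * abs(v - center) / mad > threshold]

# Hypothetical weights (kg) for samples labeled "dog"; one looks suspicious.
dog_weights = [20.0, 22.0, 25.0, 27.0, 30.0, 24.0, 26.0, 4.0]

print(flag_outliers(dog_weights))
```

A check like this only catches crude anomalies. Poisoned samples that stay within the normal range, or that make up most of a class, would pass straight through, which is why filtering works best alongside access controls and source verification.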

Building Resilience with Adversarial Training

Once your model is live, attackers will try to fool it. Your job is to train it to spot the tricks. That's where adversarial training comes in.

It’s a bit like giving your AI a vaccine. You deliberately show the model carefully crafted, malicious inputs designed to trick it. By teaching the model how to correctly identify these deceptive examples, you're building up its immunity. It learns to ignore the manipulative "noise" and focus on the real patterns, making it much tougher for an attacker to mislead it in the wild. It takes some serious computing power, but it's one of the best ways to harden a model against evasion attacks.
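Here is a minimal sketch of the idea on a one-dimensional perceptron. It is a toy under stated assumptions: the data points, the perturbation budget `eps`, and the update rule are chosen for illustration. During training, each example is first shifted by up to `eps` toward the wrong side of the current decision boundary, and the model is then updated on that worst-case version with its correct label.

```python
def sign(value):
    return (value > 0) - (value < 0)

def worst_case(x, y, w, eps):
    """Shift x by eps in the direction that most hurts the current model."""
    return x - eps * y * sign(w)

def train(data, eps, epochs=20):
    """Perceptron trained on worst-case inputs; eps=0 is ordinary training."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        updated = False
        for x, y in data:
            x_adv = worst_case(x, y, w, eps)
            if y * (w * x_adv + b) <= 0:  # misclassified or on the boundary
                w += y * x_adv
                b += y
                updated = True
        if not updated:
            break
    return w, b

def robust_accuracy(model, data, eps):
    """Fraction of examples still classified correctly after the shift."""
    w, b = model
    correct = sum(1 for x, y in data
                  if y * (w * worst_case(x, y, w, eps) + b) > 0)
    return correct / len(data)

# Hypothetical data: (feature, label) with labels -1 or +1.
DATA = [(-2.0, -1), (-1.0, -1), (1.0, 1), (3.0, 1)]

standard = train(DATA, eps=0.0)
hardened = train(DATA, eps=0.5)

print(robust_accuracy(standard, DATA, eps=0.5))
print(robust_accuracy(hardened, DATA, eps=0.5))
```

Both models classify the clean data correctly, but the standard one places its boundary close enough to one example that a shift of 0.5 fools it. The hardened model learns a boundary with more room on both sides, at the cost of generating a perturbed input for every training step.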

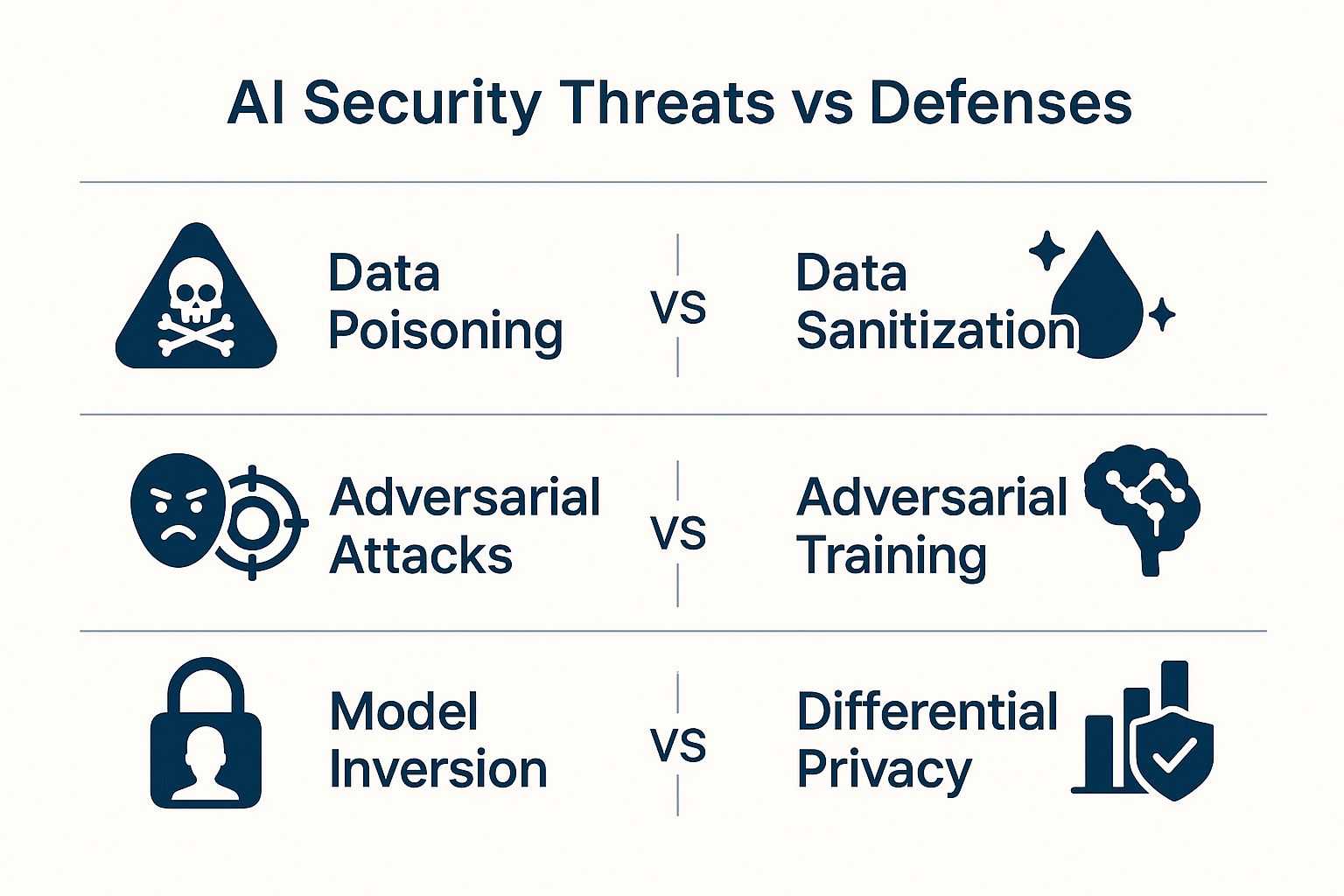

The infographic below shows how specific threats line up with their most effective defensive measures.

As you can see, there isn't a one-size-fits-all solution. A targeted defense, like using differential privacy to stop model inversion, gives you a clear roadmap for plugging specific security holes.

Protecting Data Privacy and Your Secret Sauce

When it comes to model inversion and extraction attacks, you're defending two things: your users' data and your company's intellectual property.

A powerful tool here is differential privacy. The idea is to add a small, calibrated amount of statistical "noise" during training (or to the model's outputs) so that the result barely changes whether or not any single record is included. This puts a provable limit on how much an attacker can learn about any individual from the model's responses, rather than relying on the data simply being hard to reach. The catch is a trade-off: more noise means stronger privacy but usually some loss of accuracy, so the noise level has to be tuned for each use case.
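One common way to apply differential privacy during training is to clip each example's gradient and add random noise before averaging, in the style of DP-SGD. The sketch below shows only that single aggregation step. The function names, gradient values, and parameters are illustrative assumptions, and it does not compute the resulting privacy budget; a production system would rely on a vetted library with a privacy accountant.

```python
import math
import random

def clip(grad, max_norm):
    """Scale a gradient down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]

def private_mean_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Clip each example's gradient, sum, add Gaussian noise, then average."""
    dim = len(per_example_grads[0])
    total = [0.0] * dim
    for grad in per_example_grads:
        for i, g in enumerate(clip(grad, max_norm)):
            total[i] += g
    sigma = noise_multiplier * max_norm  # noise scaled to the clipping bound
    noisy = [t + rng.gauss(0.0, sigma) for t in total]
    return [v / len(per_example_grads) for v in noisy]

# Hypothetical per-example gradients; the first is unusually large.
grads = [[3.0, 4.0], [0.3, 0.4]]
rng = random.Random(0)  # seeded only to make the example repeatable

print(private_mean_gradient(grads, max_norm=1.0, noise_multiplier=0.0, rng=rng))
print(private_mean_gradient(grads, max_norm=1.0, noise_multiplier=1.0, rng=rng))
```

Clipping caps how much any one record can influence the update, and the noise masks whatever influence remains. Raising `noise_multiplier` strengthens the privacy guarantee but makes each update less accurate, which is the trade-off to tune.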

To stop model extraction, you need to watch your API endpoints like a hawk. Things like rate limiting and query monitoring are your first line of defense.

By detecting and flagging an unusually high number of queries from one place, you can shut down an attacker before they collect enough information to replicate your model.
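A per-client sliding-window limiter is one simple way to implement this. The class name, the limits, and the client identifiers below are assumptions for illustration; timestamps are passed in explicitly so the behavior is easy to follow, whereas a real service would read the clock and likely keep counters in shared storage.

```python
from collections import defaultdict, deque

class SlidingWindowLimiter:
    """Allow at most max_queries per client within any window_seconds span."""

    def __init__(self, max_queries, window_seconds):
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        self._history = defaultdict(deque)  # client_id -> recent timestamps

    def allow(self, client_id, now):
        recent = self._history[client_id]
        # Drop timestamps that have aged out of the window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_queries:
            return False  # over the limit: reject and flag for review
        recent.append(now)
        return True

limiter = SlidingWindowLimiter(max_queries=3, window_seconds=60)

# Hypothetical traffic: one client sends four requests in quick succession.
results = [limiter.allow("client-a", now=t) for t in (0, 1, 2, 3)]
print(results)
print(limiter.allow("client-b", now=3))   # other clients are unaffected
print(limiter.allow("client-a", now=61))  # allowed again once old requests expire
```

Rate limits slow an extraction attempt rather than making it impossible, since a patient attacker can spread queries over time or across accounts. Logging rejected requests gives the monitoring side something to investigate.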

A secure Machine Learning Operations (MLOps) pipeline ties all of this together, embedding security into every step of the model's lifecycle.

- Continuous Auditing: Don't just test once. Regularly check your models for new vulnerabilities and biases that might creep in.

- Strict Access Controls: Use role-based access to make sure only the right people can tweak or even query your models.

- Version Control: Keep a detailed history of model versions and the data they were trained on. If something goes wrong, you can quickly roll back to a safe version.
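For the version-control point, one lightweight approach is to record a cryptographic fingerprint of each model artifact together with the dataset it was trained on. The sketch below uses an in-memory dictionary as a hypothetical registry; the function names and the byte strings standing in for files are assumptions for illustration.

```python
import hashlib

def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()

def register(registry, version, model_bytes, dataset_bytes):
    """Record which model artifact was trained on which dataset."""
    registry[version] = {
        "model_sha256": fingerprint(model_bytes),
        "dataset_sha256": fingerprint(dataset_bytes),
    }

def verify(registry, version, model_bytes, dataset_bytes):
    """Check that the artifacts on hand match what was registered."""
    entry = registry.get(version)
    if entry is None:
        return False
    return (entry["model_sha256"] == fingerprint(model_bytes)
            and entry["dataset_sha256"] == fingerprint(dataset_bytes))

registry = {}
model_v1 = b"model-weights-v1"                    # stand-in for a model file
dataset_v1 = b"id,label\n1,cat\n2,dog\n"          # stand-in for training data
register(registry, "v1", model_v1, dataset_v1)

tampered = dataset_v1 + b"3,dog\n"                # one extra row slipped in
print(verify(registry, "v1", model_v1, dataset_v1))
print(verify(registry, "v1", model_v1, tampered))
```

Any change to the dataset, even a single added row, produces a different fingerprint, so silent tampering shows up as a failed check. The registry itself then becomes something to protect with the same access controls as the models.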

The following table breaks down how these different strategies map directly to the threats we've discussed.

Mapping AI Threats to Effective Defense Strategies

To simplify, here’s a quick look at how common AI security risks pair with their primary and secondary defense tactics.

| AI Security Risk | Primary Mitigation Strategy | Secondary Defense Tactic |
|---|---|---|
| Data Poisoning | Data Sanitization & Validation | Strict Access Controls & Data Provenance |
| Adversarial Evasion | Adversarial Training | Input Reconstruction & Defensive Distillation |
| Model Inversion | Differential Privacy | Output Perturbation & Data Minimization |
| Model Extraction | API Rate Limiting & Monitoring | Watermarking & Regular Model Retraining |
| Insider Threats | Role-Based Access Control (RBAC) | Comprehensive Auditing & Anomaly Detection |

This mapping highlights the importance of a layered approach, where multiple defenses work together to protect the entire AI system.

Ultimately, defending your AI isn't a "set it and forget it" task. It demands constant vigilance, continuous improvement, and a smart, layered security strategy. To get more hands-on with validating your security measures, check out our guide on how to test security controls, which offers practical steps for putting your defenses to the test.

Navigating AI Security Governance and Compliance

Tackling the specific AI security risks we’ve covered is a huge part of the battle, but it's not the whole war. Technical defenses can only get you so far. To truly build a resilient AI program, you need a strong governance framework and a clear-eyed view of the ever-changing compliance landscape.

This kind of strategic oversight isn't just a "nice-to-have" anymore. As AI gets woven into the fabric of critical industries, governments and industry bodies are laying down the law to make sure these systems are built and used responsibly. This completely reframes AI security—it’s no longer just an IT problem, but a core business and legal imperative.

The Rise of AI Regulatory Frameworks

The good news is that you're not operating in a vacuum. Major institutions are stepping up to create guidelines that help standardize responsible AI development. Think of these frameworks as a blueprint for building systems that are not only effective but also fair, transparent, and secure from the get-go.

Leading the charge are organizations like the US National Institute of Standards and Technology (NIST) and the European Union Agency for Cybersecurity (ENISA). Their work is all about baking key principles into the entire AI lifecycle.

- Transparency: Making it possible to understand why an AI made a certain decision.

- Fairness: Actively hunting for and rooting out harmful biases in algorithms.

- Security-by-Design: Building security checks into the process from day one, not slapping them on as an afterthought.

Proactive compliance is more than just avoiding fines. It's about building fundamental trust with customers and partners, demonstrating that your organization is a responsible steward of both data and powerful technology.

This push for better AI security has real momentum. We're seeing major initiatives across the globe aimed at creating solid guidelines. For instance, key markets have rolled out high-profile policies like Dubai's AI Security Policy, Singapore's guidelines, the UK's AI Cyber Security Code of Practice, and NIST's detailed taxonomy of AI attacks. For a closer look at these global efforts, see this overview of how to balance AI risks and rewards.

Why Proactive Compliance Is Non-Negotiable

Getting ahead of these emerging standards isn't just a good idea; it's critical for survival. For starters, the financial penalties for getting it wrong are getting seriously steep. But perhaps more importantly, a single security breach or a discovery of algorithmic bias can shatter a company's reputation, wiping out years of customer trust in a flash.

This is especially true in sensitive fields. With the growing use of AI voice recognition in healthcare, for example, navigating specialized compliance rules is absolutely paramount. In sectors that handle this kind of personal data, proving you follow established frameworks is the price of admission. By embracing these guidelines early, you can turn a potential risk into a real competitive edge, showing the world you're committed to safe and ethical AI.

A Few Common Questions About AI Security

Got more questions? You're not alone. Let's tackle some of the most common things people ask about keeping their AI systems safe.

Think of this as the rapid-fire round, where we distill the core ideas from our guide into quick, practical answers. We'll touch on the biggest threats, how to stay secure on a tight budget, and the classic open-source vs. proprietary debate.

These are the issues that come up time and time again, regardless of the industry.

What’s the Single Biggest Security Risk in AI?

If I had to pick just one, it would be data poisoning. It's so dangerous because it attacks the AI at its most vulnerable point: the learning phase.

An attacker subtly feeds the model bad, biased, or malicious data during training. The model then learns these flawed patterns as if they were truth, leading to skewed results that can range from unfair hiring decisions to completely missing the mark on fraud detection.

The scariest part? It often goes unnoticed until the damage is already done. You only realize there’s a problem when your AI starts making consistently bad calls.

Data poisoning is like secretly swapping a few key ingredients in a recipe. The final dish looks right, but it tastes all wrong, and you have no idea why.

How Can We Improve AI Security Without a Big Budget?

Good security doesn't have to break the bank. Small teams can make a huge impact by focusing on the fundamentals.

Start with your data. Before you even think about training, get serious about verifying your data sources and running simple validation checks. It's the cheapest, easiest first line of defense.

From there, lock things down with role-based access control. Not everyone needs the keys to the kingdom. Restrict who can train, query, or modify your models and their logs.

Here are a few low-cost, high-impact steps you can take:

- Use basic anomaly detection tools to sanity-check your datasets.

- Set rate limits on your APIs to automatically block suspicious, high-volume queries.

- Dip your toes into adversarial testing. There are great open-source libraries that let you probe your models for weaknesses without needing a dedicated security team.

Is It Safer to Use Open-Source or Proprietary AI Models?

This is the classic trade-off between transparency and control. There's no single right answer, as both have their pros and cons.

Open-source models have a huge advantage in community review. With thousands of eyes on the code, vulnerabilities are often found and fixed incredibly fast. But that same transparency can be a double-edged sword; if a flaw is discovered, attackers know exactly how to exploit it.

Proprietary models, on the other hand, are a black box. This obscurity can deter casual attackers, but it also means you're putting a ton of trust in the vendor. You're completely dependent on their security practices and patch schedules.

- Open-source models get quick, community-driven fixes, but their code is public, which can help attackers.

- Proprietary models keep their inner workings hidden from attackers, but you have to trust the vendor to keep you safe.

No matter which path you choose, the best practice is to vet the model rigorously and then build your own security guardrails around it. It's all about finding the right balance.
