A Practical Guide to AI Due Diligence for Legal and Compliance Teams

So, what do we actually mean when we talk about AI due diligence? It’s the essential process of kicking the tires on an AI system—investigating its capabilities, risks, and compliance standing—before you bring it into your organization. This isn't just a surface-level check; it's a deep dive into the data, algorithms, security measures, and ethical implications. Think of it as a fundamental safeguard for any business looking to adopt AI responsibly.

The New Mandate for AI Due Diligence

The days of handing off new technology evaluations solely to the IT department are long gone. AI due diligence is now a core business function. It demands a seat at the table for legal counsel, compliance officers, and, in clinical settings, healthcare professionals. The stakes are too high to treat it as a technical afterthought.

Without proper vetting, you're walking into a minefield of risk. Imagine a hospital rolling out an AI diagnostic tool that hasn't been properly validated, only to face misdiagnoses and the legal storm that follows. Or a bank acquiring a fintech whose trading algorithm contains hidden biases, leading to discriminatory outcomes and a PR nightmare. These scenarios are illustrative, but they show exactly the kind of consequences that follow when organizations cut corners on due diligence.

From Liability to Strategic Asset

But a rigorous due diligence framework is about more than just dodging bullets. It’s also a powerful way to uncover hidden value and sharpen your competitive edge. During a merger or acquisition, for instance, a thorough review might show that a target's AI intellectual property is far more valuable than anyone initially thought. That kind of insight can completely change the valuation and dynamics of the deal.

Approached proactively, due diligence turns AI from a potential liability into a genuine strategic asset. By putting clear vetting processes in place, you build confidence that any AI you deploy aligns with your organization's ethical and operational standards. For legal teams, this means looking for platforms that were designed with diligence in mind from the very beginning.

A solid diligence process brings several key benefits to the table:

- Reduced Legal Exposure: You can spot and fix compliance gaps with regulations like GDPR or HIPAA before they turn into expensive violations.

- Smarter Decision-Making: You get clear, evidence-based insights into an AI's true performance and limitations, which leads to better strategic choices.

- Stronger Security Posture: It ensures any AI tool meets your organization's data protection standards, especially when it handles sensitive client or patient information.

- A Sharper Competitive Edge: You can move faster and with more confidence on M&A deals and tech adoption, knowing the risks have been properly managed.

A well-structured diligence process does more than check boxes; it provides the strategic clarity needed to confidently integrate AI, turning potential risks into measurable business advantages.

Before we dive deeper into the step-by-step framework, it helps to understand the main pillars supporting a comprehensive due diligence process. Each component addresses a different facet of risk and requires collaboration across various teams.

Core Pillars of AI Due Diligence

| Pillar | Key Objective | Primary Stakeholder |
|---|---|---|
| Legal & Regulatory | Ensure the AI system complies with all relevant laws, regulations, and industry standards. | Legal & Compliance |
| Data & Model Integrity | Validate the quality, sourcing, and appropriateness of the training data and the model's performance. | Data Science & IT |
| Privacy & Security | Assess data handling practices, security protocols, and vulnerability to adversarial attacks. | CISO & Security Team |
| Bias & Fairness | Test for and mitigate algorithmic bias to ensure equitable and fair outcomes for all user groups. | Ethics & Risk Officers |
| Vendor & Contract | Review vendor credibility, support, and contractual terms, including liability and IP ownership. | Procurement & Legal |

This table gives you a high-level map of the territory we're about to explore. Each pillar is interconnected, and a weakness in one can create significant risk across the entire organization.

The global push for automation is undeniable. A recent survey found that 49% of businesses automate 11 or more compliance tasks, and 82% plan to increase their investment in this area. This trend underscores the urgent need for secure, compliant tools. A platform like Whisperit, for example, with its GDPR-aligned encryption and dedicated Swiss/EU hosting, shows how AI can be implemented securely, which in turn makes the diligence process much smoother for legal teams.

This kind of efficiency allows compliance officers to shift their focus from tedious manual work to high-level strategic risk assessment. It can turn the often-sluggish M&A process into a genuine business accelerator. To better understand the foundational principles behind this, explore our guide on AI governance best practices.

Defining Your Scope and Legal Framework

Diving into an AI assessment without a clear plan is a surefire way to miss critical risks and waste valuable time. Before you can even begin to evaluate an AI system, you have to know exactly what you're measuring it against. That's why any effective AI due diligence has to start by tailoring the review to the specific use case—this single decision shapes the entire investigation.

Think about it: an AI tool used for contract analysis in an M&A deal operates in a completely different risk universe than one providing diagnostic support in a hospital. The first carries massive commercial and IP risk, while the second is governed by ironclad patient privacy laws like HIPAA. If you don't make that distinction upfront, you’ll either get bogged down in irrelevant details or, worse, miss a major compliance gap.

So, your first real move is to map out the AI's intended function and every piece of data it will touch. This isn't optional; it's the foundation for everything that follows.

Crafting a Tailored Compliance Map

With the use case defined, it’s time to build a compliance map. This isn't some generic, off-the-shelf checklist. It's a precise inventory of the legal and regulatory obligations that apply directly to your situation. The regulatory landscape for AI is a messy patchwork of existing laws and new, jurisdiction-specific rules that are in constant flux.

For instance, if the system processes personal data of individuals in the EU, the General Data Protection Regulation (GDPR) immediately comes into focus. That means your diligence has to dig deep into data subject rights, the lawful basis for processing, and how data is transferred across borders. An AI tool for HR, on the other hand, demands a close look at AI ethics, compliance with employment laws such as the EPPA, and HR risk management to steer clear of discriminatory hiring practices.

A practical compliance map needs to cover several key domains:

- Jurisdictional Nuances: Which state, federal, and international laws apply? Regulations can shift dramatically from one region to another.

- Industry-Specific Rules: Are you in a heavily regulated field like healthcare (HIPAA) or finance (FINRA rules)?

- Data Privacy Obligations: What kind of personal or sensitive data is the system accessing, processing, or storing?

- Emerging AI Legislation: Which new or phasing-in laws, like the EU AI Act or new state-level rules, could affect the tool’s viability down the road?
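To make the compliance map concrete, here is a minimal Python sketch that turns a use-case description into a list of candidate regimes to review. The `UseCase` fields, the `compliance_map` function, and every rule in it are hypothetical and deliberately simplified. Real applicability depends on details that a few `if` statements cannot capture, such as where the organization is established, what type of entity it is, and any thresholds. Treat the output as a starting list for counsel, not a conclusion.

```python
from dataclasses import dataclass, field

# Hypothetical, simplified rules: each maps facts about a use case to a regime
# that deserves a closer look. Real applicability depends on far more detail
# (establishment, entity type, thresholds) than these checks capture.

@dataclass
class UseCase:
    name: str
    jurisdictions: set[str]                 # e.g. {"EU", "US-NY"}
    sector: str                             # e.g. "healthcare", "finance", "hr", "legal"
    data_categories: set[str] = field(default_factory=set)  # e.g. {"personal", "health"}

def in_us(uc: UseCase) -> bool:
    """True if any listed jurisdiction is a U.S. one (hypothetical "US-XX" labels)."""
    return any(j.startswith("US") for j in uc.jurisdictions)

def compliance_map(uc: UseCase) -> list[str]:
    """Return a sorted list of candidate regimes to review for this use case."""
    regimes: set[str] = set()
    if "EU" in uc.jurisdictions:
        regimes.add("EU AI Act")
        if "personal" in uc.data_categories:
            regimes.add("GDPR")
    if in_us(uc) and uc.sector == "healthcare" and "health" in uc.data_categories:
        regimes.add("HIPAA")
    if in_us(uc) and uc.sector == "finance":
        regimes.add("FINRA rules")
    if uc.sector == "hr":
        regimes.add("Employment and anti-discrimination law")
    return sorted(regimes)

contract_tool = UseCase("M&A contract analysis", {"EU"}, "legal", {"personal"})
diagnostic_tool = UseCase("Diagnostic support", {"US-NY"}, "healthcare", {"personal", "health"})

print(compliance_map(contract_tool))    # ['EU AI Act', 'GDPR']
print(compliance_map(diagnostic_tool))  # ['HIPAA']
```

Keeping the rules in code means you can version them and re-run them whenever the use case changes. That fits a checklist meant to be a living document.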

A well-defined scope acts as your North Star throughout the due diligence process. It ensures your legal team asks the right questions from day one, focusing effort on the most critical risk areas for your organization.

This foundational work keeps your team from getting lost in technical rabbit holes and ensures the entire project stays on track.

Key Legal Considerations for Your Checklist

As you start to build your framework, you'll find that certain legal issues pop up again and again across different AI systems. The initial checklist you create should be a living document, designed to be adapted based on the unique risk profile of the AI you're evaluating.

Your team should be ready to ask some tough, pointed questions.

- Data Provenance and Rights: Where, exactly, did the training data come from? Do you actually have the legal right to use it for this purpose? It’s shocking how often this is overlooked. Using scraped web data or third-party datasets without the proper license is a common—and expensive—mistake.

- Intellectual Property Ownership: Who owns the model? The algorithms? The output? If the lines are blurry here, you're setting yourself up for serious IP disputes later on.

- Liability and Indemnification: When the AI makes a costly mistake—and at some point, it might—who is on the hook legally? Vendor agreements need crystal-clear clauses on liability, especially for high-stakes applications.

- Transparency and Explainability: Can the vendor explain how the model gets from A to B? For some regulations, a "black box" AI is a total non-starter. You might be legally required to explain an AI-driven decision to a customer or a regulator.

The legal ground here is anything but stable. Any organization operating in the U.S., for example, needs to keep a close eye on what’s happening at the state level. Staying current on California's AI legislation gives you a good read on where U.S. regulations are heading.

By hammering out this legal framework first, you build a solid, defensible foundation for your entire AI due diligence process.

Evaluating Data Integrity and Model Validity

At the end of the day, any AI due diligence comes down to one simple, critical question: can you actually trust the data? An AI model, no matter how complex its algorithms are, is just a mirror reflecting the information it learned from. If that foundation is shaky, biased, or incomplete, the model's outputs will be unreliable at best and dangerously wrong at worst.

For legal and compliance teams, this isn't some abstract technical problem—it's a massive risk area. You don’t need to be a data scientist to get to the heart of the matter. Your job is to ask the sharp, practical questions that peel back the layers and expose how the model was built and what data fuels it.

A great place to start is with data provenance. Think of it as the data’s chain of custody. You need to trace where the data came from, what happened to it along the way, and how it was changed. Without that clear history, you can't be sure the data is legitimate or that you even have the legal right to use it.

Unpacking Data Quality and Hidden Biases

Once you've established where the data came from, the real work begins: judging its quality. Bad data is the silent killer of AI projects. It can creep in from anywhere, so your diligence team needs to know what red flags to look for.

Here are a few key areas to dig into:

- Completeness: Are there big holes in the dataset? A classic example is an AI trained on contract data that's missing documents from certain states. That tool will inevitably stumble when it encounters a contract from one of those excluded areas.

- Accuracy: Does the data reflect the real world? Outdated or just plain wrong information will teach the model to make flawed predictions.

- Consistency: Is the data recorded in a uniform way? Something as simple as inconsistent date formats or different ways of writing company names can throw a model off and wreck its performance.
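Completeness and consistency checks like these can be scripted even by a non-specialist. The Python sketch below uses only the standard library and runs them on a small hypothetical sample of contract records. The field names, the expected-state list, and the choice of YYYY-MM-DD as the house date format are all assumptions for illustration.

```python
import re
from collections import Counter

# Hypothetical sample of a contract-training dataset (state, signing date).
records = [
    {"state": "NY", "signed": "2021-03-04"},
    {"state": "CA", "signed": "04/03/2021"},
    {"state": "NY", "signed": "2022-11-30"},
    {"state": "NY", "signed": "2023-01-15"},
]

# Assumption: the jurisdictions the tool is expected to handle in production.
EXPECTED_STATES = {"NY", "CA", "TX"}
ISO_DATE = re.compile(r"^\d{4}-\d{2}-\d{2}$")

def missing_states(rows, expected):
    """Completeness: expected jurisdictions with no examples at all."""
    present = {r["state"] for r in rows}
    return sorted(expected - present)

def nonstandard_dates(rows):
    """Consistency: rows whose date is not written as YYYY-MM-DD."""
    return [r for r in rows if not ISO_DATE.match(r["signed"])]

def state_shares(rows):
    """Representation: fraction of rows per state, to expose heavy skew."""
    counts = Counter(r["state"] for r in rows)
    return {state: n / len(rows) for state, n in counts.items()}

print(missing_states(records, EXPECTED_STATES))  # ['TX']
print(len(nonstandard_dates(records)))           # 1
print(state_shares(records))                     # {'NY': 0.75, 'CA': 0.25}
```

Accuracy is harder to automate because it needs a trusted reference to compare against. Inconsistent company names can be caught with a similar normalization pass.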

Understanding how to improve data quality is central to this whole process. But even a pristine, high-quality dataset can hide subtle biases. Imagine a model built to predict litigation risk that was trained almost entirely on lawsuits involving huge corporations. It would likely misjudge the risks of smaller, independent cases entirely. This is where your team's real-world experience is priceless: you can spot the blind spots that the raw numbers miss.

Confronting Model Drift and Validation

Here’s something many people forget: an AI model isn’t a one-and-done asset. Its performance will almost certainly degrade over time. We call this model drift, and it happens when the new, real-world data the model sees starts to look different from its original training data.

Think about an AI contract review tool trained on legal precedents from five years ago. New laws get passed, new cases set new precedents, and suddenly the model’s "knowledge" is out of date. Its analysis becomes less accurate, slowly but surely introducing significant risk into your workflow.

That’s why ongoing validation is non-negotiable.

A model that performed perfectly in a sterile lab environment means very little. The real test is whether it can perform reliably, day in and day out, in the messy, unpredictable reality of your business.

Your diligence must confirm the vendor has a solid process for monitoring, testing, and retraining their model. Look for clear documentation and audit trails that show exactly how the model has changed over time. A well-organized data environment, governed by a clear framework, is far easier to audit and trust. If you're looking to build one, our guide on creating a data governance framework template can help. Without this oversight, you’re flying blind and just hoping the model is still doing its job correctly.
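One common way to monitor for drift is the Population Stability Index (PSI). It compares how a model's inputs or scores are distributed in production against the distribution seen at validation time. The Python sketch below is a minimal illustration. The bin edges and sample data are hypothetical, and the 0.1 and 0.25 cut-offs are widely used rules of thumb rather than a regulatory standard. It does not describe any particular vendor's monitoring process.

```python
import bisect
import math

def bin_shares(values, edges):
    """Fraction of values in each bin; values beyond the outer edges go to the end bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        counts[bisect.bisect_right(edges[1:-1], v)] += 1
    eps = 1e-6  # keeps empty bins from producing log(0)
    return [max(c / len(values), eps) for c in counts]

def psi(baseline, current, edges):
    """Population Stability Index: sum of (a - e) * ln(a / e) over bins."""
    e = bin_shares(baseline, edges)
    a = bin_shares(current, edges)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))

def drift_level(value):
    """Commonly cited rule-of-thumb bands; tune them to your own risk tolerance."""
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "moderate"
    return "significant"

# Hypothetical model scores at validation time vs. in production.
edges = [0.0, 0.25, 0.5, 0.75, 1.0]
baseline = [0.1, 0.3, 0.6, 0.9] * 25
current = [0.1] * 10 + [0.3] * 20 + [0.6] * 30 + [0.9] * 40

score = psi(baseline, current, edges)
print(round(score, 3), drift_level(score))  # 0.228 moderate
```

In a vendor review, the exact formula matters less than the process around it. Ask whether the vendor tracks a metric like this on a schedule, has defined thresholds, and keeps records of what happened when a threshold was crossed.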

Auditing for Security, Privacy, and Bias

Once you’ve confirmed the integrity of the data, the next step is to go deeper, probing the system’s defenses against both outside attacks and internal blind spots. This is where security, privacy, and bias audits come into play: a critical trio of risk assessments that protect not just your organization, but your clients as well. An AI tool is only as strong as its weakest link, and these audits are designed to find those links before they snap.

This isn’t about just ticking boxes on a GDPR checklist. It requires a hands-on, almost adversarial approach to test the system’s real-world resilience. You need to verify that sensitive information is protected with robust encryption, not just when it's sitting on a server but also while it's in transit. I’ve seen too many systems with great at-rest encryption fall apart because of weak in-transit protocols. You also have to scrutinize access controls to ensure only the right people can see or touch critical data, preventing both accidents and outright theft.

Probing for Vulnerabilities and Data Leaks

A proper security audit means actively trying to break things. You’ll want to work with your technical team or bring in a third-party expert to run penetration tests and vulnerability scans. The whole point is to simulate an attack to see how the AI system reacts. Does it rely on software components with known security flaws? What happens when you feed it strange inputs designed to confuse the model into producing something it shouldn't?

At the same time, you have to test privacy controls for potential data leakage. This is a huge risk. For instance, an AI model trained on sensitive client emails could accidentally regurgitate confidential details in its responses. In the legal world, where client confidentiality is sacrosanct, that’s a nightmare scenario.

Here are a few key areas I always focus on:

- Data Minimization: Is the system a data hoarder? It should only collect and keep what is absolutely essential for it to function.

- Data Subject Rights: If a user asks to see, fix, or delete their data, is there a clear, reliable process in place? This is a must-have for regulations like GDPR.

- Anonymization Techniques: If the system claims to use anonymized data, are the methods actually strong enough to prevent someone from re-identifying individuals?
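One simple way to test an anonymization claim is to measure k-anonymity. Group the records by the quasi-identifiers that remain, meaning fields such as ZIP prefix, age band, and sex that could be linked to outside data, and find the smallest group. The Python sketch below uses hypothetical field names and data. A value of k = 1 means at least one person is unique on those fields and potentially re-identifiable.

```python
from collections import Counter

# Hypothetical "anonymized" records: direct identifiers removed, but ZIP prefix,
# age band, and sex remain and could be linked to outside data sources.
records = [
    {"zip3": "100", "age_band": "30-39", "sex": "F", "diagnosis": "A"},
    {"zip3": "100", "age_band": "30-39", "sex": "F", "diagnosis": "B"},
    {"zip3": "100", "age_band": "40-49", "sex": "M", "diagnosis": "C"},
    {"zip3": "941", "age_band": "30-39", "sex": "F", "diagnosis": "A"},
    {"zip3": "941", "age_band": "30-39", "sex": "F", "diagnosis": "D"},
]
QUASI_IDENTIFIERS = ("zip3", "age_band", "sex")

def group_sizes(rows, qis):
    """Count how many rows share each combination of quasi-identifier values."""
    return Counter(tuple(r[q] for q in qis) for r in rows)

def k_anonymity(rows, qis):
    """k = size of the smallest group; k == 1 means someone is unique on these fields."""
    sizes = group_sizes(rows, qis)
    return min(sizes.values()) if sizes else 0

def groups_below(rows, qis, k):
    """Quasi-identifier combinations shared by fewer than k records."""
    return [combo for combo, n in group_sizes(rows, qis).items() if n < k]

print(k_anonymity(records, QUASI_IDENTIFIERS))      # 1
print(groups_below(records, QUASI_IDENTIFIERS, 2))  # [('100', '40-49', 'M')]
```

A high k is not sufficient on its own. If everyone in a group shares the same sensitive value, that value is still disclosed, which is why related measures such as l-diversity exist.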

For a more structured approach, our guide on conducting a data privacy impact assessment provides a framework that fits perfectly here.

Uncovering and Mitigating Algorithmic Bias

Algorithmic bias is one of the most insidious risks in any AI system. An AI model is a direct reflection of its training data. If that data contains historical prejudices, and many large datasets do, the model will learn them and can even amplify them. This can lead to discriminatory outcomes that create legal and reputational disasters.

Think about an AI tool a bank uses to screen loan applications. If its training data shows that fewer loans were historically approved for people in certain zip codes, the AI might learn to unfairly penalize new, creditworthy applicants from those same areas. Or consider a hiring tool trained on resumes from a male-dominated field; it might systematically downgrade highly qualified female candidates without anyone noticing.

Testing for this requires a dedicated effort:

- Analyze Demographic Representation: Scrutinize the training data. Is it balanced and truly representative of the population the AI will serve?

- Conduct Fairness Testing: Run simulations using different demographic profiles to see if the model produces fair and equitable outcomes across all groups.

- Evaluate for Disparate Impact: Check if the model's decisions disproportionately harm a protected class, even if there's no direct discriminatory intent.
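For the disparate impact check, one widely used screening heuristic in U.S. employment contexts is the "four-fifths rule" from the EEOC's Uniform Guidelines on Employee Selection Procedures. Under it, a group whose selection rate is below 80% of the highest group's rate is flagged for closer review. The Python sketch below applies that ratio to hypothetical model outcomes. The group labels and numbers are invented, and a flag is a prompt for investigation, not a legal finding.

```python
def selection_rates(outcomes):
    """outcomes: iterable of (group, selected) pairs; returns selection rate per group."""
    totals, selected = {}, {}
    for group, was_selected in outcomes:
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + (1 if was_selected else 0)
    return {g: selected[g] / totals[g] for g in totals}

def impact_ratios(outcomes):
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(outcomes)
    top = max(rates.values())
    if top == 0:
        return {g: 1.0 for g in rates}  # nobody selected: no group is favored
    return {g: r / top for g, r in rates.items()}

def below_four_fifths(outcomes, threshold=0.8):
    """Groups whose impact ratio falls under the threshold (a screening flag, not a finding)."""
    return sorted(g for g, ratio in impact_ratios(outcomes).items() if ratio < threshold)

# Hypothetical results from a fairness test run: 100 profiles per group.
outcomes = [("group_a", i < 50) for i in range(100)] + [("group_b", i < 30) for i in range(100)]

print(selection_rates(outcomes))    # {'group_a': 0.5, 'group_b': 0.3}
print(below_four_fifths(outcomes))  # ['group_b']
```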

A purely automated system, no matter how advanced, cannot grasp the full context of human decisions. This is why a human-in-the-loop is not a luxury; it’s an essential safeguard for catching nuanced errors and biases that automated tests can miss.

Legal professionals are adopting AI at a remarkable clip, with 77% using it for tasks like document review. And yet, a staggering 63% of financial leaders say data security is a major barrier to wider adoption. That disconnect is exactly why security officers must rigorously vet any new tool. They have to ensure systems have robust protections, like Whisperit's end-to-end encryption and GDPR-aligned controls, long before they're deployed in sensitive legal environments. You can get more perspective on how AI is transforming the legal profession on the Thomson Reuters blog.

This next table offers a simple way to start organizing and prioritizing the risks you uncover.

AI Risk Assessment Matrix

This sample matrix can help your team identify, categorize, and prioritize risks found during the due diligence process. It’s a starting point to turn abstract findings into an actionable plan.

| Risk Area | Potential Impact (Low/Medium/High) | Likelihood (Low/Medium/High) | Mitigation Strategy |
|---|---|---|---|
| Data Privacy | High | Medium | Implement strict access controls; conduct regular DPIAs; use anonymization. |
| Algorithmic Bias | High | High | Diversify training data; implement fairness testing; require human oversight. |
| Security Vulnerabilities | High | Medium | Conduct regular penetration testing; maintain a software bill of materials (SBOM). |
| Model Inaccuracy | Medium | Low | Establish performance monitoring; create a retraining schedule. |
| Regulatory Non-Compliance | High | Low | Maintain up-to-date compliance checklists; consult with legal experts. |
| Intellectual Property Leaks | High | Low | Enforce strict data handling policies; use data loss prevention (DLP) tools. |

By mapping out risks this way, you can focus your resources where they’re needed most, ensuring that your AI adoption strategy is both ambitious and responsible.
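If you want to rank the matrix rather than eyeball it, a common convention is to convert the ratings to numbers and multiply impact by likelihood. The Python sketch below does that for the sample rows in the matrix. The 1/2/3 scale and the multiplicative score are assumptions. Many teams weight impact more heavily or use a lookup grid instead.

```python
# Assumption: map the matrix's Low/Medium/High ratings to 1/2/3 and multiply.
LEVELS = {"Low": 1, "Medium": 2, "High": 3}

# (risk area, potential impact, likelihood), taken from the sample matrix.
RISKS = [
    ("Data Privacy", "High", "Medium"),
    ("Algorithmic Bias", "High", "High"),
    ("Security Vulnerabilities", "High", "Medium"),
    ("Model Inaccuracy", "Medium", "Low"),
    ("Regulatory Non-Compliance", "High", "Low"),
    ("Intellectual Property Leaks", "High", "Low"),
]

def prioritize(risks):
    """Return (risk area, score) pairs, highest score first; ties keep their table order."""
    scored = [(area, LEVELS[impact] * LEVELS[likelihood]) for area, impact, likelihood in risks]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

for area, score in prioritize(RISKS):
    print(f"{score}  {area}")
```

Python's sort is stable, so risks with equal scores stay in the order they appear in the matrix. Those ties are a good place to apply judgment rather than arithmetic.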

Locking Down Vendor Diligence and Contracts

Let's be realistic: most companies aren't building their own AI from the ground up. They're bringing in tools from third-party vendors. This completely changes the game for AI due diligence. Your focus pivots from scrutinizing internal code to launching a rigorous investigation into the partners you bring into your ecosystem.

Vetting AI vendors is far more than a procurement checkbox. It's a critical risk management function. Get it wrong, and you could be facing serious legal, financial, and reputational blowback. A quick once-over just won’t do the job here. You need a disciplined approach that pairs deep, pointed questions with contractual safeguards that are rock-solid.

It all starts with a detailed vendor questionnaire, one that goes way beyond the standard security questions. You have to probe the very core of how their AI system was designed and how it operates day-to-day.

Designing a Vendor Questionnaire That Gets Real Answers

Think of your questionnaire as your primary evidence-gathering tool. It’s how you get vendors on the record about their practices, creating a baseline you can use for your assessment and hold them accountable to later. A flimsy questionnaire just invites vague, non-committal answers and a false sense of security. A tough one, on the other hand, gives you the clarity to make a truly informed decision.

Make sure you hit these key areas hard:

- Model Transparency and Explainability: How exactly was this model trained, validated, and tested for bias? Force them to explain how their model reaches its conclusions, especially for decisions that carry significant weight.

- Data Lineage and Rights: Where did the training data come from? Get specifics. You need to know how they ensure they have the legal right to use it. What are their documented policies for data retention, anonymization, and, just as importantly, deletion?

- Security and Compliance Proof: Do they hold an ISO 27001 certification or a SOC 2 attestation report? Don't just take their word for it; ask for the certificates and audit reports to back it up.

- Incident Response and Notification: What’s the plan when something goes wrong, like a data breach or a major model failure? The process needs to be documented, with specific timelines for when they are required to notify you.
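Keeping the questionnaire in a structured form makes it easy to see what a vendor has not yet answered or backed up. The Python sketch below models the areas listed above. The `Question` class, the question wording, and the rule that security claims need attached documents are hypothetical choices for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Question:
    area: str
    text: str
    needs_evidence: bool = False  # True when a claim must be backed by documents

# Hypothetical question set mirroring the questionnaire areas.
QUESTIONS = [
    Question("Model transparency", "How was the model trained, validated, and tested for bias?"),
    Question("Data lineage", "Where did the training data come from, and what gives you the right to use it?"),
    Question("Data lineage", "What are your retention, anonymization, and deletion policies?"),
    Question("Security", "Which security certifications or attestations do you hold?", needs_evidence=True),
    Question("Incident response", "What are your notification timelines for breaches and model failures?"),
]

def open_items(questions, answers, evidence):
    """List (area, question, reason) for anything unanswered or unsupported.

    answers: question text -> vendor's answer
    evidence: question text -> list of attached document names
    """
    items = []
    for q in questions:
        if not answers.get(q.text, "").strip():
            items.append((q.area, q.text, "unanswered"))
        elif q.needs_evidence and not evidence.get(q.text):
            items.append((q.area, q.text, "claim without supporting documents"))
    return items

answers = {
    QUESTIONS[0].text: "Supervised training on licensed data; bias tested quarterly.",
    QUESTIONS[1].text: "Licensed third-party corpora; licenses available on request.",
    QUESTIONS[3].text: "We are SOC 2 compliant.",
}
for area, question, reason in open_items(QUESTIONS, answers, evidence={}):
    print(f"[{area}] {reason}: {question}")
```

The bare "We are SOC 2 compliant" answer is flagged because no documents are attached. A claim without the underlying report is not evidence.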

A well-designed questionnaire is the first step in creating vendor accountability. If you're building out your own framework, digging into a comprehensive vendor security assessment questionnaire is a great place to start so you don’t miss anything crucial.

Must-Have Contractual Safeguards for Any AI Agreement

After you've vetted a vendor, the focus shifts to the negotiation table. This is where you translate your diligence into legally binding protections. Your standard Software-as-a-Service (SaaS) agreement is almost certainly not up to the task. The unique risks tied to AI, from algorithmic bias to massive data privacy failures, demand specific, carefully drafted clauses that leave no room for ambiguity.

Your legal team needs to go to the mat for these provisions in any AI vendor contract.

- Ironclad Data Ownership and Usage Rights: The contract has to state, unequivocally, that you own your data and any insights or outputs generated from it. It also needs to severely restrict the vendor’s ability to use your data to train their models without your explicit, written consent. This is non-negotiable; it stops your confidential information from becoming part of their core product.

- Liability for AI-Driven Mistakes: When the AI messes up and causes financial loss or reputational harm, who pays the price? The contract must have crystal-clear language on indemnification, spelling out the vendor's liability for errors, biased outcomes, and security breaches that start on their end.

- Unrestricted Audit and Testing Rights: You must have the contractual right to audit the vendor’s security controls. For some systems, you may even need the right to directly test the AI model for performance drift and bias. This clause isn’t just for onboarding; it ensures you have a lever for ongoing transparency.

- A Clean Exit Strategy and Data Portability: What happens when you part ways? The agreement has to lay out a clear, step-by-step process for the vendor to securely return or destroy your data. It should also guarantee you can move to a new system without being held hostage by proprietary formats.

If these clauses are missing, you are essentially agreeing to take on a host of unknown risks on the vendor's terms. Strong contracts aren't about a lack of trust; they're about creating the clarity and accountability needed for a partnership to work safely.

Imagine a healthcare provider vetting an AI diagnostic tool. The stakes are incredibly high. That contract absolutely must include a Business Associate Agreement (BAA) and specific language covering HIPAA compliance, the protection of patient data, and who is liable for clinical errors influenced by the AI’s output. Coming to the table armed with this knowledge is the only responsible way to bring AI into your organization.

Making Diligence Part of Your Daily Workflow

Running a solid AI due diligence check is one thing, but actually embedding it into your team's day-to-day work is a whole different ballgame. The real trick is to weave these checks and balances into your existing operations so they become second nature.

Let's be honest, a haphazard approach just doesn't cut it. When evidence is scattered across email chains and your findings are buried in a dozen different Word docs, you're not just creating a headache—you're creating risk. Trying to defend that process later on? Good luck.

The key is to stop treating diligence as a one-off project. Instead, think of it as a continuous workflow managed from a central hub. This shifts the entire process from a messy, manual slog to a structured, efficient system. You’re essentially creating a single source of truth for every AI assessment you run.

Building a Central Hub for Diligence

This integrated workspace acts as the command center for every diligence project. It's not just a fancy folder for documents; it's a dynamic project management tool. Imagine tracking a vendor review from a single dashboard, with their security questionnaire, contract, and all communications right there at your fingertips. This gives legal and compliance teams the complete visibility they need.

What’s really interesting is that you can use AI to help with the diligence process itself. A legal team, for instance, could use an AI assistant to scan a vendor's massive terms of service agreement. The assistant can quickly flag questionable clauses on data ownership or liability for a lawyer to review, saving hours of manual reading. Using "AI on AI" like this not only speeds things up but also lets your experts focus on the truly thorny issues.

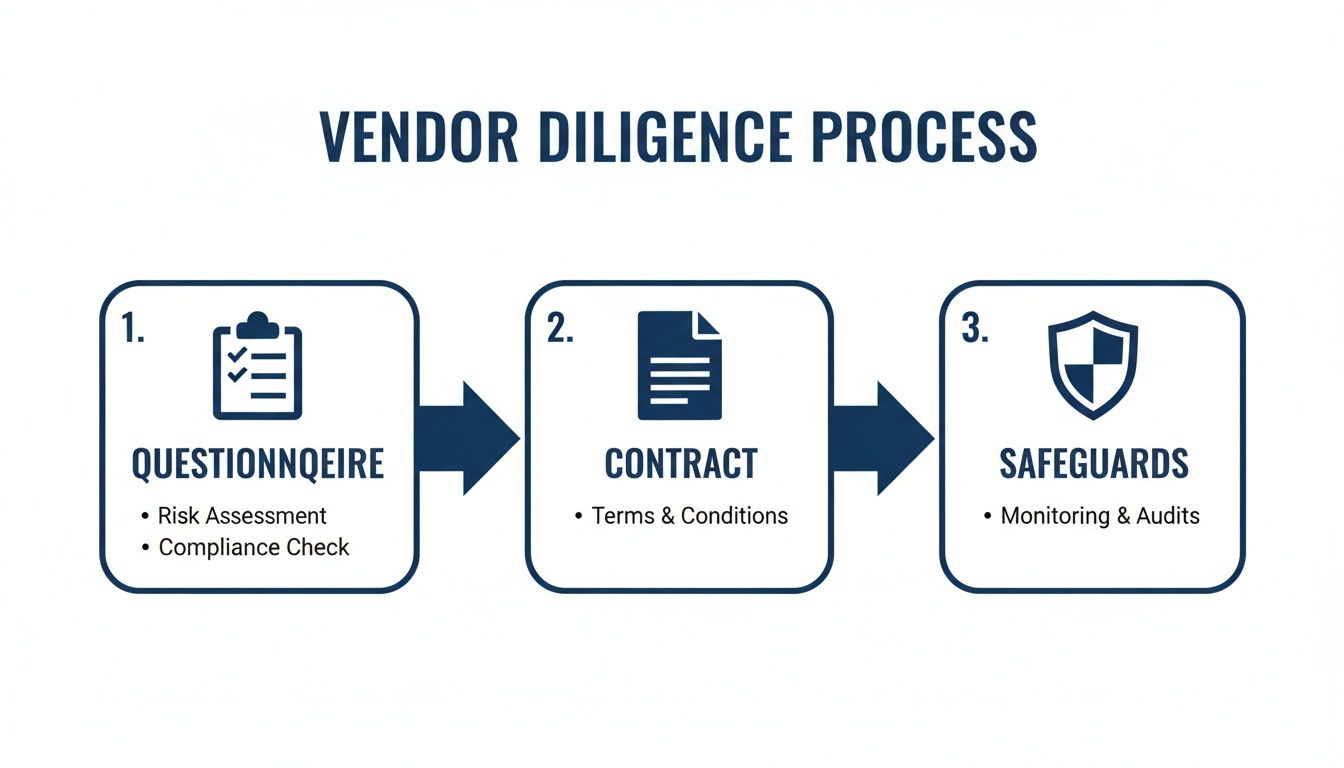

This flow chart breaks down the core stages you'd typically see when vetting a new vendor.

As you can see, a sound workflow moves logically from the initial questionnaire to a deep dive on the contract, making sure every safeguard is locked in before you give the green light.

Adopting this systematic approach brings some immediate wins:

- Consistency: Every review follows the same playbook. Nothing critical gets skipped.

- Efficiency: Automating evidence capture and reporting frees up your team from mind-numbing manual tasks.

- Defensibility: You automatically get a complete, time-stamped audit trail. This creates a rock-solid record of your diligence efforts if you ever need to prove it.
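A time-stamped audit trail is more defensible when it is also tamper-evident. One standard way to get that is a hash chain: each entry stores a SHA-256 hash of its own contents plus the hash of the entry before it. The Python sketch below uses only the standard library and hypothetical actors and events to show the idea. It does not describe how any particular platform stores its records.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(body):
    """SHA-256 over a canonical JSON encoding of the entry (keys sorted)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

def append_entry(log, actor, event):
    """Append a time-stamped entry that commits to the hash of the previous one."""
    body = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "event": event,
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    log.append({**body, "hash": _digest(body)})

def verify(log):
    """Recompute every hash; editing an entry, or removing one mid-chain, breaks it."""
    prev = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev_hash"] != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True

log = []
append_entry(log, "j.doe", "Sent security questionnaire to vendor")
append_entry(log, "j.doe", "Received SOC 2 Type II report")
append_entry(log, "a.smith", "Approved contract with audit-rights clause")
print(verify(log))  # True

log[1]["event"] = "Received ISO 27001 certificate"  # simulate after-the-fact editing
print(verify(log))  # False
```

A hash chain only detects changes. Someone who can rewrite the whole log, or quietly drop the newest entries, could go unnoticed unless the latest hash is also recorded somewhere outside their control.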

By baking diligence directly into your workflow, you build a system that is repeatable and, most importantly, defensible. This doesn't just lower your risk; it gives your organization the confidence to embrace new AI tools faster.

Ultimately, this methodical integration changes the entire conversation around AI adoption. Diligence stops being a roadblock and starts being a strategic advantage. It provides the clear, evidence-based green light needed to make decisions with confidence.

Platforms like Whisperit, designed as a central workspace for legal work, are built on this very principle. They bring all the necessary tools for secure evidence management under one roof, allowing legal teams to keep a tight grip on oversight without slowing everyone else down.

Common Questions About AI Due Diligence

Let's be honest, setting up a formal AI due diligence process can feel overwhelming. It often sounds like a massive undertaking, especially when everyone is already swamped. But getting started is usually the hardest part.

The best way to kick things off is by pulling together a small, cross-functional team. This can't just be a legal or IT initiative. You need people from compliance, the business unit that will actually use the tool, and ideally, a sponsor from leadership. The first goal isn't to boil the ocean; it's to create a practical, risk-based framework. This document should clearly state how much risk the company is willing to take on with AI and lay out a consistent process for vetting new tools before they go live.

How Do We Handle the Technical Side Without an Army of Data Scientists?

This is a big one. You don't need a Ph.D. in machine learning to do this right. The goal isn't to reverse-engineer the vendor's model. It's about asking smart, targeted questions that force transparency and good documentation.

Start by creating a standard questionnaire that you can send to any AI vendor. Make sure it covers the non-negotiables:

- Data Provenance: Be specific. Ask where the training data came from. Do they have clear, legal rights to all of it?

- Model Validation: How, exactly, was the model trained and tested? What did they do to check for performance issues and potential bias?

- Security Protocols: What measures are in place to safeguard the data the model interacts with? Don't accept vague answers.

For really critical systems—think anything involved in hiring, lending, or healthcare—it's worth bringing in a third-party expert to double-check the vendor's answers.

How Often Should We Re-evaluate an AI System?

AI due diligence is not a one-time, check-the-box activity. Models drift, regulations change, and new risks emerge. It has to be an ongoing process.

This means building a re-evaluation schedule into your governance plan from day one. For high-risk systems, like a tool that assists with clinical diagnoses, you should be looking at it at least once a year or after any major update. For less critical tools, every 18-24 months is a reasonable cadence.
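A cadence like this is easy to encode, so that review dates are computed rather than remembered. The Python sketch below is a minimal version with several assumptions. The tier names are hypothetical. Using 18 months for lower-risk tools picks the shorter end of the 18-24 month range. Applying the major-update trigger to every tier, not only high-risk systems, is also an assumption.

```python
import calendar
from datetime import date

# Intervals in months from the stated cadence; 18 months for lower-risk tools
# (the shorter end of the 18-24 range) is an assumption.
REVIEW_INTERVAL_MONTHS = {"high": 12, "standard": 18}

def add_months(d, months):
    """Shift a date by whole calendar months, clamping to the month's last day."""
    index = d.month - 1 + months
    year, month = d.year + index // 12, index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))

def next_review(last_review, tier, major_update=None):
    """Scheduled re-evaluation date, pulled forward if a major update ships first."""
    scheduled = add_months(last_review, REVIEW_INTERVAL_MONTHS[tier])
    if major_update is not None and last_review < major_update < scheduled:
        return major_update
    return scheduled

print(next_review(date(2024, 8, 31), "standard"))                              # 2026-02-28
print(next_review(date(2024, 1, 15), "high", major_update=date(2024, 6, 1)))  # 2024-06-01
```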

Having a structured, defensible process is your best defense against AI-related risks. A tool like Whisperit can give you a central workspace to manage all this—from capturing evidence and sending vendor questionnaires to generating reports. It helps legal teams keep a tight rein on the process without becoming a roadblock to innovation. Learn how Whisperit can support your AI due diligence framework.